Australia's Digital Iron Curtain: The World-First Ban on Social Media for Under-16s and the Looming Age-Verification Crisis

In a move that has sent shockwaves through the global tech landscape and divided families across the continent, Australia’s landmark legislation banning social media accounts for minors under the age of 16 has officially entered into force. The *Online Safety Amendment (Social Media Minimum Age) Act 2024*, which took effect on December 10, 2025, marks a seismic shift in how governments are choosing to regulate the digital lives of their youngest citizens, drawing a bold line in the sand against the relentless pull of Big Tech.

The new reality applies to a constellation of platforms where teenage life has largely played out for the past decade, including giants like TikTok, Instagram, Facebook, YouTube, Snapchat, X, Twitch, Reddit, and Threads. The intent is clear, yet implementation has proved complex and has sparked an emotionally charged debate. The law does not penalise young users or their parents; instead, it drops a financial hammer on the platforms. Those that fail to take *“reasonable steps”* to prevent or remove accounts held by Australian residents under 16 face penalties of up to AU$49.5 million (approximately US$33 million) per serious or repeated breach.

The Desperate Rationale: From Grieving Parents to Government Policy

The motivation behind this radical policy is rooted in profound distress. It is a desperate legislative prayer offered up by a society grappling with a youth mental health crisis increasingly linked to the pernicious side effects of algorithmic addiction and digital abuse. The ban was driven in part by the tireless advocacy of grieving mothers, whose children tragically took their own lives following relentless online bullying and exposure to harmful content, and Prime Minister Anthony Albanese framed it as a stand against platforms that profit from children’s attention.

For many parents, this law is a welcome, even *cathartic*, release from a battle they felt ill-equipped to fight. They hope this forced digital detox will resurrect authentic, face-to-face friendships, improve sleep, and reduce the anxiety-inducing pressures of curated online perfection. UNICEF Australia acknowledges the protective intent, calling it a step toward safer digital spaces, but also suggests the true remedy should focus on fundamentally improving safety features, not simply delaying access. This dichotomy is the essence of the reform: a paternalistic intervention designed to protect a generation from a social experiment they never consented to.

The Digital Exodus and the Pursuit of Loopholes

The legislation’s implementation has been met with a wave of dismay, frustration, and ingenuity from the youth it is intended to shield. “I can’t really imagine giving it up completely,” lamented one teenager in Germany, a sentiment echoed by thousands of Australian under-16s. In the lead-up to the December 10 deadline, many posted mournful farewell messages, a digital wake for their online lives. A significant national survey revealed that the vast majority of young Australians not only opposed the ban but also intended to bypass it.

The immediate aftermath has seen a predictable surge in workarounds: children are using Virtual Private Networks (VPNs) to mask their location, creating new accounts with falsified birth dates, or, most commonly, simply borrowing their parents’ login credentials. It is a high-stakes digital game of Whac-A-Mole, in which the government and tech companies are the lumbering players and nimble, tech-savvy teenagers keep finding the soft spots in the system. Critics argue that pushing minors onto less regulated, underground networks, where moderation is non-existent, actually exacerbates the very danger the law was meant to mitigate.

Age Verification: A Privacy Conundrum

The entire efficacy of this world-first ban hinges on the platforms' ability to take *“reasonable steps”* to verify age, a requirement that has cast a blinding spotlight on the uncomfortable, complex realm of age-assurance technology. The eSafety Commissioner, tasked with enforcement, has offered a deliberately flexible standard, avoiding prescription of a single technology.

However, the methods being considered, which include AI-driven facial scans, voice analysis, and third-party document verification, have triggered intense debate. Trials revealed that AI tools were not *“guaranteed to be effective,”* sometimes proving inaccurate by as much as *“plus or minus 18 months,”* a glaring margin of error that could lock out a 16-year-old or let a 14-year-old through. More alarmingly, the more robust verification methods raise significant privacy issues: to protect children from an algorithm, the government may effectively be mandating the collection of their biometric data or identity documents, trading one digital risk for another in a stunning paradox. This is a classic systems-thinking trap: a solution intended to improve safety may generate a new, unforeseen privacy hazard.
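To make the arithmetic behind that margin of error concrete, here is a minimal Python sketch. It assumes an age estimator whose error never exceeds the reported 18 months, and it introduces a hypothetical "buffer" threshold (an illustrative assumption, not something the Act or the eSafety Commissioner prescribes) above which users pass without a further check. The names `decide`, `simulate`, and `months` are likewise hypothetical.

```python
# Hypothetical illustration only: the constants, the "buffer" idea and the
# function names are assumptions, not any platform's or regulator's method.
# Ages are counted in whole months to avoid floating-point rounding.

MIN_AGE_MONTHS = 16 * 12   # the Act's minimum age: 16 years
ERROR_MONTHS = 18          # the "plus or minus 18 months" error seen in trials


def months(years: int, extra_months: int = 0) -> int:
    """Convert years plus months into a month count."""
    return years * 12 + extra_months


def decide(estimated_months: int, buffer_months: int = 0) -> str:
    """Let a user through only if the *estimated* age clears the minimum
    plus an optional safety buffer; otherwise escalate to another check
    (for example, a document check)."""
    if estimated_months >= MIN_AGE_MONTHS + buffer_months:
        return "pass"
    return "escalate"


def simulate(true_months: int, error_months: int, buffer_months: int = 0) -> str:
    """Model an estimator that is off by `error_months` for this user."""
    return decide(true_months + error_months, buffer_months)


if __name__ == "__main__":
    # No buffer: a 14-and-a-half-year-old over-estimated by 18 months passes...
    print(simulate(months(14, 6), +ERROR_MONTHS))                # pass
    # ...while a 16-year-old under-estimated by 18 months is escalated.
    print(simulate(months(16), -ERROR_MONTHS))                   # escalate
    # A buffer equal to the error band now escalates the 14.5-year-old,
    print(simulate(months(14, 6), +ERROR_MONTHS, ERROR_MONTHS))  # escalate
    # but it also escalates a correctly estimated 17-year-old.
    print(simulate(months(17), 0, ERROR_MONTHS))                 # escalate
```

The last two lines show the trade-off: a buffer wide enough to stop every under-16 within the error band also pushes genuine 16- and 17-year-olds into a second, often more data-hungry check, which is exactly where the privacy concerns arise.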

A Global Test Case: Blueprint or Cautionary Tale?

Australia’s audacious gambit is being watched with rapt attention worldwide. Governments in the UK, Denmark, New Zealand, Malaysia, and France are actively considering or drafting similar legislation. As a “world-first social experiment”, the ban is both a beacon of hope for advocates of child protection and a source of profound concern for civil liberties groups. The coming months will be a crucial testing ground to determine if a government-mandated digital disconnect can truly foster well-being, or if it will simply teach a new generation how to be even *more* adept at circumventing the rules. The debate rages on: is this the visionary blueprint for a safer digital future, or a well-intended, yet flawed, first draft?

See you on the other side.

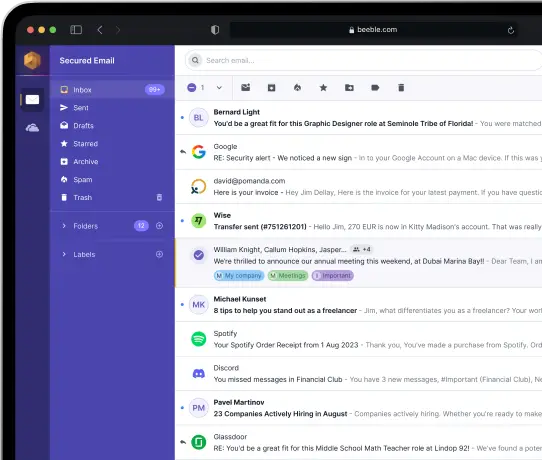

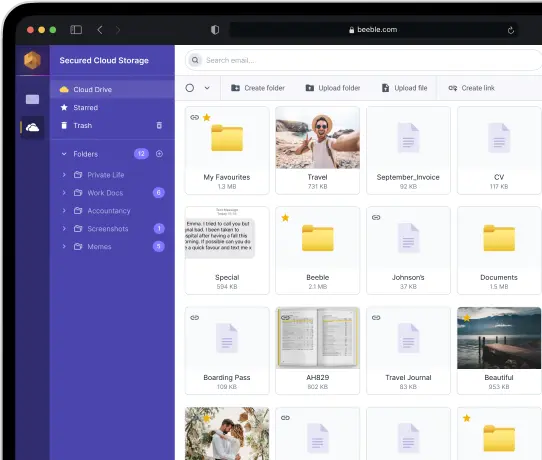
