India Mandates 3-Hour Deepfake Takedown: New IT Rules Demand Swift Platform Action and AI Content Labeling

The Indian government has significantly tightened its digital governance framework, notifying sweeping amendments to its Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. The new mandate requires social media platforms to remove deepfakes and other forms of unlawful content within as little as three hours of receiving a takedown request, a drastic reduction from the previous 36-hour deadline. Effective from February 20, 2026, these new rules place an unprecedented burden of speed and technical diligence on major technology intermediaries to curb the rise of synthetic media.

The 180-Minute Deadline: A Drastic Shift in Takedowns

The most immediate change is the sharply compressed timeframe for content removal. Where content is flagged as illegal by a competent government authority or a court order, intermediaries must remove it or disable access to it within three hours. This mandate covers a broad range of unlawful material, including content linked to serious crimes and deceptive impersonation.

The rules impose an even tighter deadline for the most sensitive violations. For non-consensual intimate imagery (NCII), such as deepfake nudity, or material exposing private areas, the takedown deadline is just two hours. This reflects a regulatory recognition that in cases of sexual exploitation, every minute of exposure can cause severe, lasting trauma to the victim.
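For teams building compliance tooling, the two windows described above reduce to simple clock arithmetic. The following is a minimal sketch, not an official implementation: the `Category` names, the `takedown_deadline` and `minutes_remaining` helpers, and the assumption that the clock starts when the request is received are all illustrative choices, not terms taken from the rules.

```python
from datetime import datetime, timedelta, timezone
from enum import Enum


class Category(Enum):
    # Hypothetical category names for this sketch; the rules define no such enum.
    UNLAWFUL_ORDER = "unlawful_order"  # flagged by a court or competent authority
    NCII = "ncii"                      # non-consensual intimate imagery


# Windows as reported in this article: three hours and two hours.
TAKEDOWN_WINDOW = {
    Category.UNLAWFUL_ORDER: timedelta(hours=3),
    Category.NCII: timedelta(hours=2),
}


def takedown_deadline(received_at: datetime, category: Category) -> datetime:
    """Latest time by which content must be removed or access disabled.

    Assumes the clock starts at receipt of the request (an assumption of
    this sketch).
    """
    if received_at.tzinfo is None:
        raise ValueError("received_at must be timezone-aware")
    return received_at + TAKEDOWN_WINDOW[category]


def minutes_remaining(received_at: datetime, category: Category, now: datetime) -> int:
    """Whole minutes left before the deadline; negative once it has passed."""
    remaining = takedown_deadline(received_at, category) - now
    return int(remaining.total_seconds() // 60)


if __name__ == "__main__":
    received = datetime(2026, 2, 20, 10, 0, tzinfo=timezone.utc)
    print(takedown_deadline(received, Category.NCII).isoformat())
```

Using timezone-aware timestamps avoids ambiguity when a request is logged in one region and actioned in another.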

Beyond Removal: Mandatory Labeling and Digital Provenance

The government has not only focused on swift removal but also on preventing the deceptive spread of synthetic media. For the first time, Indian law provides a formal, technical definition for “Synthetically Generated Information” (SGI). This SGI, which includes AI-generated audio, video, and visual content that appears indistinguishable from a real person or event, is now subject to mandatory disclosure and traceability requirements.

Intermediaries must now ensure that all SGI is clearly and prominently labeled. This is designed to alert users immediately to the synthetic nature of the content they are viewing. Furthermore, platforms that enable the creation or dissemination of SGI are required to embed persistent metadata or provenance markers into the file itself, where technically feasible. This digital fingerprint allows investigators and regulators to trace the content back to its point of origin, even if the file is copied and shared across different platforms. Crucially, the rules explicitly bar the removal or suppression of these AI labels or associated metadata.
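The rules as described here do not prescribe a metadata format, so the following is only a rough sketch of what a label-plus-provenance record might look like. The field names, the `SGI_LABEL` text, and the `build_provenance` and `verify_provenance` helpers are all hypothetical. The record binds a visible label and an origin to the file through a SHA-256 hash, and a check fails if the label has been stripped or the content no longer matches.

```python
import hashlib
from datetime import datetime, timezone

SGI_LABEL = "AI-generated"  # hypothetical label text, not mandated wording


def build_provenance(content: bytes, origin_platform: str, created_at: datetime) -> dict:
    """Build an illustrative provenance record for a synthetic media file."""
    return {
        "sgi": True,
        "label": SGI_LABEL,
        "origin_platform": origin_platform,
        "created_at": created_at.isoformat(),
        "sha256": hashlib.sha256(content).hexdigest(),
    }


def verify_provenance(content: bytes, record: dict) -> bool:
    """True only if the record still carries its label and matches the content."""
    return (
        record.get("sgi") is True
        and bool(record.get("label"))
        and record.get("sha256") == hashlib.sha256(content).hexdigest()
    )


if __name__ == "__main__":
    data = b"example-media-bytes"
    record = build_provenance(data, "example-platform", datetime.now(timezone.utc))
    print(verify_provenance(data, record))
```

A plain hash like this breaks as soon as a file is re-encoded or resized, so it would not by itself meet the goal of tracing copies across platforms. Production systems would more likely rely on embedded, signed manifests or watermarking; this sketch only illustrates the idea of a label that cannot be silently dropped.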

The Platform’s New Due Diligence Checklist

For major social media platforms—referred to as Significant Social Media Intermediaries—the compliance requirements are extensive. The new rules elevate due diligence from a reactive policing model to a preventative one.

Key Due Diligence Requirements for Platforms:

- User Declaration: Before a user publishes content, the platform must require them to explicitly declare whether the content was generated or altered using artificial intelligence.

- Technical Verification: Platforms cannot simply rely on self-declaration. They are legally mandated to deploy “reasonable and appropriate technical measures,” including automated tools, to cross-verify the user's claim and detect illegal deepfake content.

- Proactive Prevention: Platforms must utilize automated tools to actively prevent the promotion or amplification of categories of illegal, deceptive, and sexually exploitative AI content, such as child sexual abuse material and non-consensual imagery.
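The three requirements above can be read as a single pre-publish decision. The sketch below is one possible interpretation, not the method set out in the rules: the `Upload` fields, the `DETECTOR_THRESHOLD` value, and the `pre_publish_decision` function are assumptions made for illustration, and the detector score stands in for whatever automated tool a platform actually deploys.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    PUBLISH_WITH_LABEL = "publish_with_label"
    BLOCK = "block"


@dataclass
class Upload:
    declared_ai: bool      # the user's self-declaration
    detector_score: float  # hypothetical 0..1 score from an automated classifier
    prohibited: bool       # hypothetical flag: matched a prohibited category


DETECTOR_THRESHOLD = 0.8  # assumed cut-off; a real system would tune this


def pre_publish_decision(upload: Upload) -> Decision:
    """Combine self-declaration with automated checks before publishing."""
    # Proactive prevention: prohibited categories are never published.
    if upload.prohibited:
        return Decision.BLOCK
    # Technical verification: the detector can override a "not AI" declaration.
    if upload.declared_ai or upload.detector_score >= DETECTOR_THRESHOLD:
        return Decision.PUBLISH_WITH_LABEL
    return Decision.PUBLISH


if __name__ == "__main__":
    print(pre_publish_decision(Upload(declared_ai=False, detector_score=0.93, prohibited=False)))
```

The ordering matters: the prohibited-content check runs first so that a truthful AI declaration can never result in illegal material being published with a label.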

Why the Urgency? The Safe Harbour Stakes

The driving force behind this regulatory acceleration is the increasing threat of deepfakes being weaponized for fraud, misinformation, political manipulation, and severe personal harassment, a concern that intensified throughout 2025. The stakes for non-compliance are higher than ever.

Historically, social media platforms have been protected by 'Safe Harbour' provisions (Section 79 of the IT Act), which shield them from liability for content posted by their users. The new amendments introduce a critical condition: if an intermediary fails to meet the obligations of the IT Rules, for instance by knowingly allowing unlabelled SGI to circulate or by missing the newly compressed takedown windows, it risks losing its Safe Harbour protection. This legal vulnerability could open the door for platforms to be sued as if they were the content creators themselves, representing a significant liability shift for Big Tech in the Indian market.

Practical Takeaways for Creators and Users

With these new rules taking effect on February 20, 2026, the digital ecosystem in India is set for a structural change.

For the User and Creator:

- Declare Your AI Content: If you use any generative AI tool to create or significantly alter audio, video, or images, you will likely be required to declare this before publishing on major social platforms.

- Understand Exemptions: Routine editing—such as color correction, noise reduction, or minor formatting that does not materially distort the original meaning—is generally exempt from SGI classification.

- Report Swiftly: If you encounter harmful content, especially non-consensual intimate imagery or a deceptive impersonation deepfake, report it immediately to the platform’s grievance officer. With the response time for sensitive content now at two hours, prompt reporting makes a real difference.

For Tech Innovators and Platforms:

- Compliance by Design: Developers of AI tools and content platforms operating in India must integrate mandatory labeling and metadata embedding into their product design from the ground up.

- Automate Moderation: The shift from 36 hours to two or three hours demands a large-scale expansion of automated detection and moderation tools, backed by more human moderators to handle complex or disputed cases within the tight timeframe.

India’s new framework represents one of the world's most aggressive regulatory responses to deepfakes, establishing an operational benchmark that will test the technical capability and resource deployment of global social media giants.

See you on the other side.

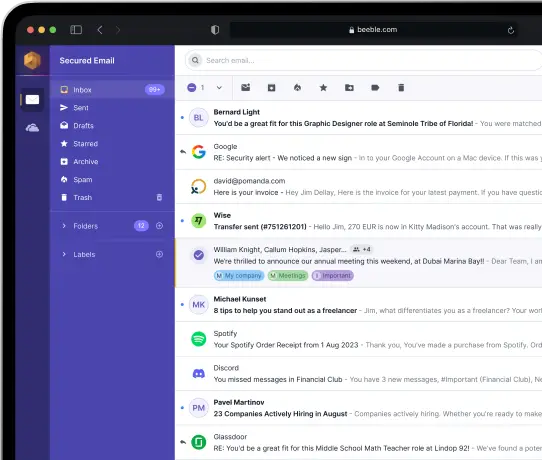

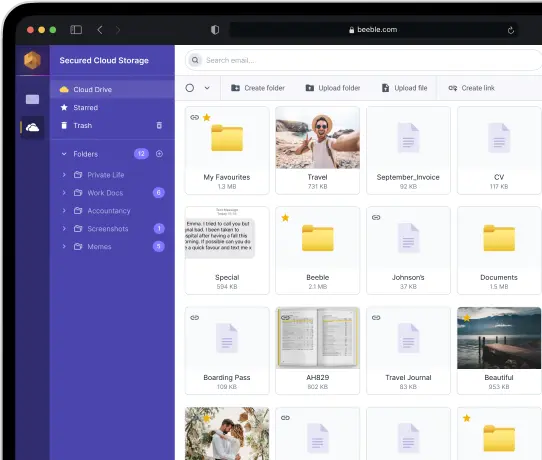
