Navigating the AI Frontier: Why Effective Governance is the New Competitive Advantage

As we move through the first quarter of 2026, the conversation surrounding Artificial Intelligence has shifted. We are no longer debating whether AI will change the world—that transformation is well underway. Instead, the focus has sharpened on a more difficult question: How do we build the guardrails necessary to protect our society without stifling the very innovation that promises to cure diseases and stabilize our climate?

This is the era of AI governance. It is a period defined by the realization that trust is not just a moral requirement but a market necessity. For organizations and nations alike, the path forward requires a delicate dance between embracing the "promise" of hyper-efficiency and mitigating the "peril" of unchecked algorithmic power.

The Dual-Faced Nature of Intelligence

To understand the urgency of governance, one must look at the sheer breadth of AI’s impact. In healthcare, AI models can now predict protein structures in hours that once took years of laboratory work to determine, and AI-assisted design is contributing to personalized cancer vaccines currently in Phase III trials. In the energy sector, AI-driven smart grids are managing the intermittency of renewables with a precision that has reduced carbon footprints in major cities by nearly 15% over the last two years.

Yet, this same power has a shadow. The ease with which synthetic media can be generated has made digital identity more fragile than ever. We’ve seen the rise of "automated social engineering," where sophisticated AI agents can conduct phishing attacks at a scale and personalization level previously unimaginable. Without a structured framework, the tools meant to liberate human potential could inadvertently erode the foundations of privacy and autonomy.

The 2026 Regulatory Landscape: From Theory to Enforcement

In 2026, we are seeing the first real "teeth" of international AI policy. The European Union’s AI Act, which categorizes systems by risk level, is being phased in. Its bans on "unacceptable-risk" practices have applied since February 2025 and its rules for general-purpose AI models since August 2025. Most of its requirements for "High-Risk" applications, including transparency and human-oversight obligations, are scheduled to apply from August 2026. Meanwhile, in the United States, the shift from voluntary commitments to agency-led enforcement has created a patchwork of sector-specific oversight: the SEC monitors AI in trading, while the FDA scrutinizes AI in diagnostics.

This regulatory environment is no longer a distant cloud on the horizon; it is the ground on which companies must build. The challenge for many is that regulation often moves at a linear pace, while AI capability grows exponentially. Effective governance, therefore, cannot be a static set of rules. It must be a living framework that evolves alongside the technology it seeks to manage.

Innovation vs. Compliance: The Great Balancing Act

A common fear among tech leaders is that heavy-handed regulation will hand a competitive advantage to regions with fewer restrictions. However, a different narrative is emerging. In the early days of the internet, secure protocols such as HTTPS helped enable the e-commerce boom. In much the same way, robust AI governance is becoming an enabler of growth.

When a system is governed effectively, it becomes "predictable." Investors are more likely to fund a startup that can show its models have been tested for harmful bias and that its legal exposure is understood. Consumers are more likely to share data with a service that operates under a transparent, audited framework. In this light, governance isn't a brake; it’s the steering wheel that lets the car go faster safely.

The Technical Guardrails: Red-Teaming and Watermarking

Governance isn't just about legal text; it’s about technical implementation. By 2026, the industry has converged on several key safety measures:

- Adversarial Red-Teaming: Before a frontier model is released, it is subjected to rigorous "stress tests" by independent third parties to find vulnerabilities in its logic or safety filters.

- Provenance and Watermarking: To combat deepfakes, major platforms have adopted cryptographic provenance standards (such as C2PA Content Credentials) that tag AI-generated content at the source. These tags let users check where an image or video came from and whether it has been altered since it was tagged.

- Algorithmic Auditing: Companies are now using "AI to watch AI," employing specialized monitoring tools that alert human supervisors the moment a model begins to "drift" or exhibit biased behavior in real-world scenarios.
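
The provenance idea above can be illustrated with a minimal sketch. When content is created, a generating service attaches a signed manifest containing a content hash and some metadata. Later, anyone holding the verification key can check that neither the content nor the manifest has changed. This is a deliberate simplification, not how any real standard is implemented. It uses a shared HMAC key from Python's standard library, whereas production schemes such as C2PA rely on public-key signatures and certificate chains so that verifiers never hold a signing secret. The key, the manifest fields, and the function names are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared key held by the generating service. Real provenance
# standards (e.g. C2PA) use public-key certificates instead of a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def tag_content(content: bytes, generator: str) -> dict:
    """Attach a signed provenance manifest to content at the point of creation."""
    manifest = {
        "sha256": hashlib.sha256(content).hexdigest(),  # fingerprint of the content
        "generator": generator,                         # which system produced it
        "ai_generated": True,
    }
    # Sign a canonical (key-sorted) serialization so field order cannot matter.
    payload = json.dumps(manifest, sort_keys=True).encode()
    manifest["signature"] = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return manifest


def verify_content(content: bytes, manifest: dict) -> bool:
    """Return True only if the manifest is intact and matches the content."""
    claimed = {k: v for k, v in manifest.items() if k != "signature"}
    payload = json.dumps(claimed, sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    # Constant-time comparison; fails if any manifest field was edited.
    if not hmac.compare_digest(expected, manifest.get("signature", "")):
        return False
    # Fails if the content itself was altered after tagging.
    return claimed["sha256"] == hashlib.sha256(content).hexdigest()
```

For example, checking an image against the manifest produced by `tag_content` for that same image succeeds. Checking an edited copy against that manifest fails. A manifest like this proves origin only while it travels with the file, because stripping it leaves the content unlabeled. That is one reason provenance metadata is often paired with watermarking.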
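
The "drift" alerts described under Algorithmic Auditing are often driven by simple distribution statistics. One common choice is the Population Stability Index (PSI). PSI compares how a model's inputs or scores are distributed in production against a baseline sample, such as the data used during validation. The sketch below makes three assumptions: a single numeric feature, equal-width bins taken from the baseline's range, and the widely used rule of thumb that a PSI above 0.25 signals a significant shift. The function names and the threshold are illustrative, not part of any particular monitoring product.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    live: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a live sample of one feature or score."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(baseline)
    actual = proportions(live)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def check_drift(baseline: Sequence[float], live: Sequence[float], threshold: float = 0.25) -> bool:
    """Return True (i.e. alert a human reviewer) when PSI exceeds the threshold."""
    return population_stability_index(baseline, live) > threshold
```

In practice, a check like this would run on a schedule for each monitored feature and output score. The alert would go to a human reviewer rather than automatically triggering retraining, in keeping with the human-supervision role described above.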

Practical Takeaways for Organizations

For businesses and developers navigating this landscape, the transition to governed AI can be daunting. Here is a practical checklist for moving forward:

- Inventory Your AI: You cannot govern what you don't track. Create a central registry of every AI model and third-party API being used across your organization.

- Establish a Cross-Functional Committee: Governance shouldn't live solely in the IT department. Include legal, ethics, and product teams to ensure a holistic view of risk.

- Prioritize Transparency: Be clear with your users about when they are interacting with an AI and how their data is being used to train or refine those models.

- Adopt "Safety by Design": Don't treat ethics as a final check. Integrate bias testing and privacy-preserving techniques (like differential privacy) into the earliest stages of development.
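
The differential privacy mentioned under "Safety by Design" can be illustrated with its textbook building block, the Laplace mechanism. To release a count with ε-differential privacy, you add noise drawn from a Laplace distribution with scale 1/ε. That scale works because adding or removing one person's record changes a count by at most 1. The sketch below is for intuition only, and the function names are hypothetical. It uses Python's non-cryptographic `random` module and ordinary floating-point noise; a production differential-privacy library would handle both more carefully.

```python
import random
from typing import Callable, Iterable, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws (rate 1/scale)
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(
    records: Iterable,
    predicate: Callable[[object], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    A counting query has sensitivity 1: adding or removing one record changes
    the true count by at most 1, so the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)
```

Smaller values of `epsilon` give stronger privacy but noisier answers. Each additional query against the same data also consumes more of the overall privacy budget, so the budget is usually tracked across all releases rather than per query.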

The Path Forward

Effective AI governance is not about reaching a final destination; it is a continuous process of calibration. As we look toward the end of the decade, the goal is to create a world where the benefits of AI—from solving the climate crisis to democratizing education—are accessible to all, while the risks are managed by a global community that values human agency above all else.

By treating governance as a foundational pillar of innovation rather than a bureaucratic hurdle, we ensure that the path to the future remains both bright and secure.

Sources

- European Commission: The AI Act (Official Documentation)

- NIST: AI Risk Management Framework (AI RMF 1.0)

- OECD: AI Policy Observatory and Principles

- Stanford Institute for Human-Centered AI (HAI): 2025/2026 AI Index Report

- ISO/IEC 42001: Information technology — Artificial intelligence — Management system

See you on the other side.

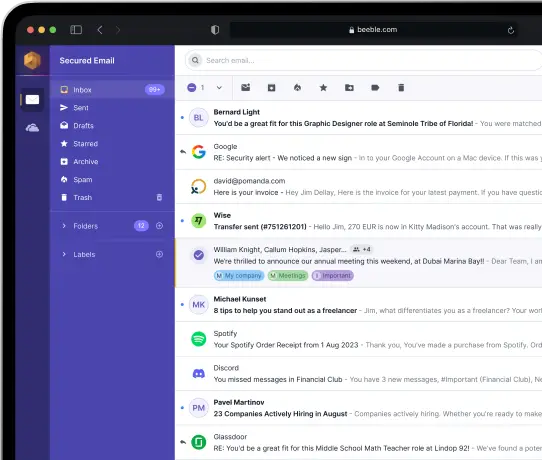

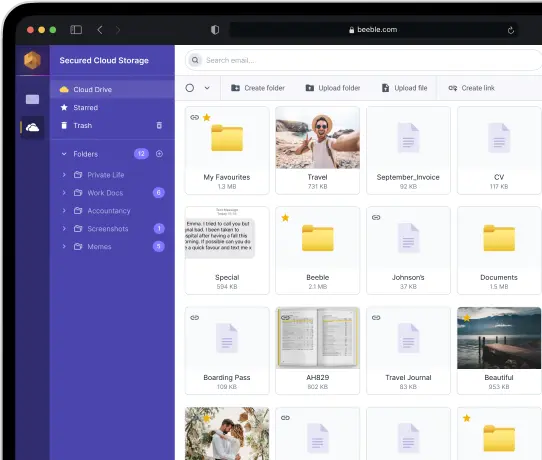

Our end-to-end encrypted email and cloud storage solution provides the most powerful means of secure data exchange, ensuring the safety and privacy of your data.

/ Create a free account