Spain Orders Investigation Into X, Meta, and TikTok Over AI-Generated Child Abuse Material

Spain Takes Action Against Tech Giants

Spain has directed prosecutors to investigate X (formerly Twitter), Meta, and TikTok over allegations that AI-generated child sexual abuse material has been distributed through their services. This marks a significant escalation in European efforts to hold technology companies accountable for harmful and illegal content on their platforms.

The move comes as regulators across the European Union intensify their scrutiny of how tech companies moderate content, particularly as artificial intelligence makes it easier to create realistic synthetic images and videos. Spain's decision signals that at least some governments are no longer willing to wait for platforms to self-regulate when it comes to protecting children online.

The Growing Threat of AI-Generated Abuse Material

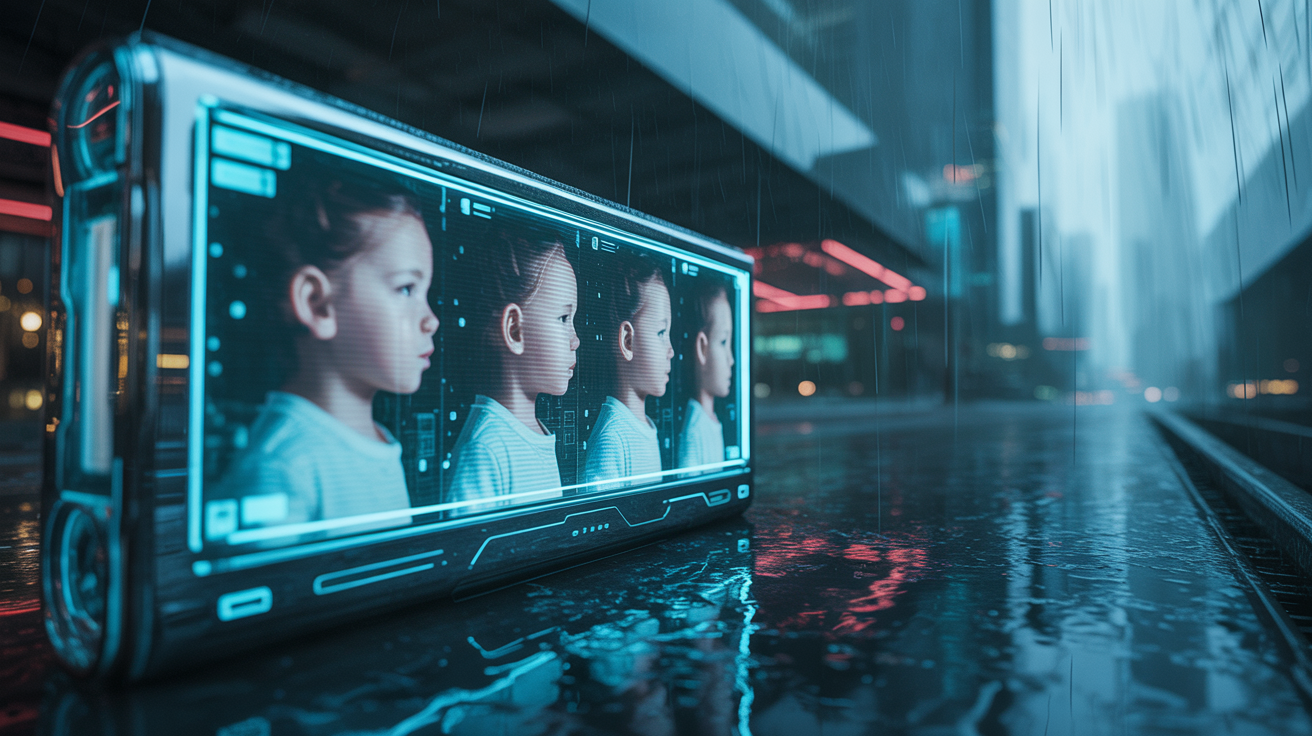

Artificial intelligence has transformed the landscape of online safety threats. Modern generative AI tools can create photorealistic images and videos that are increasingly difficult to distinguish from authentic content. While these technologies have legitimate uses in entertainment, design, and education, they have also opened a disturbing new avenue for producing child sexual abuse material, in some cases without a child being directly involved in its creation.

However, experts and law enforcement agencies emphasize that such material is still deeply harmful. It normalizes the sexualization of children, can be used to groom real victims, and makes it harder for investigators to identify and rescue actual victims by flooding detection systems with synthetic content. The existence of this material, regardless of its origin, perpetuates a market for child exploitation.

The Spanish investigation focuses on whether X, Meta, and TikTok have adequate safeguards to detect and remove this AI-generated content, and whether they've been sufficiently responsive to reports of such material appearing on their platforms.

Legal Framework Under the Digital Services Act

Spain's action takes place within the framework of the European Union's Digital Services Act (DSA), which has applied to all online platforms since February 2024. The legislation requires platforms to act on illegal content once they are notified of it, and it requires the largest services to assess and mitigate systemic risks, including the spread of illegal material.

Under the DSA, very large online platforms (those with at least 45 million average monthly active users in the EU) face additional obligations. They must conduct risk assessments, implement mitigation measures, and undergo independent audits. X, TikTok, and Meta's Facebook and Instagram have all been designated as very large online platforms.

Failure to comply with DSA requirements can result in fines of up to six percent of a company's global annual turnover. For Meta, which reported more than $160 billion in revenue for 2024, the maximum penalty would run to billions of dollars. The stakes are enormous, both financially and reputationally.
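As a rough worked example, assume the six percent cap is applied to Meta's reported 2024 revenue of about $164.5 billion. The result is a ceiling, not a prediction; actual DSA fines are set case by case and can be far lower.

```latex
\text{Maximum fine} = 0.06 \times \$164.5\,\text{billion} \approx \$9.9\,\text{billion}
```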

How Platforms Are Responding

The three companies targeted by Spain's investigation have all publicly committed to combating child sexual abuse material, though their approaches and track records vary.

Meta has invested significantly in detection technology, using a combination of AI-powered scanning tools and human moderators. The company reports millions of pieces of child exploitation content to the National Center for Missing and Exploited Children (NCMEC) annually. However, critics argue that Meta's systems still allow too much harmful content to slip through, particularly on its encrypted messaging services.

X, under Elon Musk's ownership, has faced increased scrutiny over content moderation. The company sharply cut its trust and safety staff after Musk acquired it in late 2022, raising concerns about its capacity to detect and remove illegal content. While X says it has zero tolerance for child sexual abuse material, several research organizations have documented instances where reported content remained accessible for extended periods.

TikTok emphasizes its combination of automated detection and human review, along with partnerships with child safety organizations. The platform has also introduced features designed to protect younger users, such as private-by-default settings for accounts belonging to minors. Nevertheless, the sheer volume of videos uploaded every day presents enormous challenges for any moderation system.

What This Means for Tech Regulation

Spain's investigation reflects a broader shift in how governments approach platform accountability. Rather than treating social media companies as neutral intermediaries, regulators increasingly expect them to take active responsibility for the content they host and amplify.

This case could set important precedents for several reasons. First, it specifically addresses AI-generated content, an area where regulations and enforcement practices are still evolving. Second, it demonstrates that individual EU member states are willing to pursue investigations independently, even as the European Commission conducts its own DSA enforcement actions.

The investigation also highlights a tension at the heart of content moderation: balancing user privacy with safety. End-to-end encryption, which protects user communications from surveillance, also makes it harder to detect illegal content. Finding solutions that preserve privacy while preventing abuse remains one of the most challenging problems in tech policy.

Practical Implications for Users and Parents

While platforms and regulators work through these issues, parents and users can take several steps to enhance online safety:

Enable built-in safety features. The major platforms offer parental controls, restricted modes, or content filters, though the options vary by service. Take time to configure these settings appropriately for younger users.

Maintain open communication. Talk with children about what they encounter online and create an environment where they feel comfortable reporting concerning content or interactions.

Report suspicious content immediately. All major platforms have reporting mechanisms. Use them. Reports help train detection systems and alert human moderators to problematic content.

Stay informed about platform policies. Companies regularly update their safety features and content policies. Reviewing these changes helps you understand what protections are in place.

Consider third-party monitoring tools. Various parental control applications can provide additional layers of protection and visibility into how children use social media.

What Happens Next

Spain's prosecutors will now gather evidence to determine whether the platforms violated Spanish law or EU regulations. This process could take months or even years. If investigators find violations, the companies could face substantial fines, orders to change their practices, or both.

Meanwhile, other European countries are watching closely. Similar investigations could launch elsewhere if Spain's inquiry uncovers systemic failures. The European Commission, which has primary responsibility for enforcing the DSA against very large platforms, may also intensify its scrutiny based on Spain's findings.

For the broader tech industry, this case underscores that AI-generated content will receive the same serious treatment as traditional illegal material. As generative AI capabilities continue to advance, platforms will need to invest heavily in detection technologies that can identify synthetic content and distinguish it from legitimate uses of AI.

The investigation also raises questions about the future of content moderation itself. With billions of pieces of content posted daily across major platforms, can any combination of AI and human moderators truly keep pace? Some experts argue that fundamental changes to how platforms operate—such as limiting algorithmic amplification of content that hasn't been reviewed—may be necessary to adequately address these challenges.

Sources

- European Commission official documentation on the Digital Services Act

- Meta Transparency Center reports on child safety measures

- TikTok Safety Center policy documentation

- X Safety Center guidelines and enforcement reports

- National Center for Missing and Exploited Children (NCMEC) reporting statistics

- Technology policy research from Stanford Internet Observatory

- European Union regulatory enforcement announcements

See you on the other side.

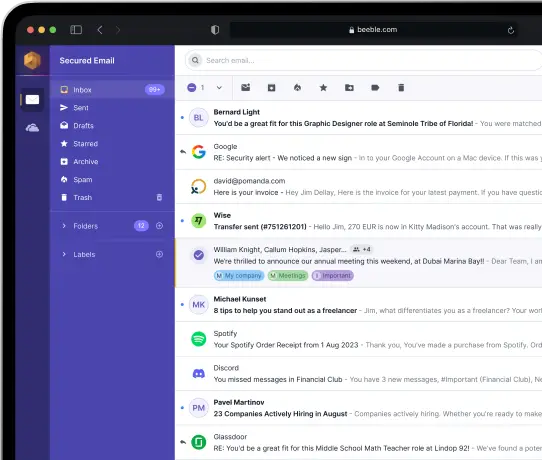

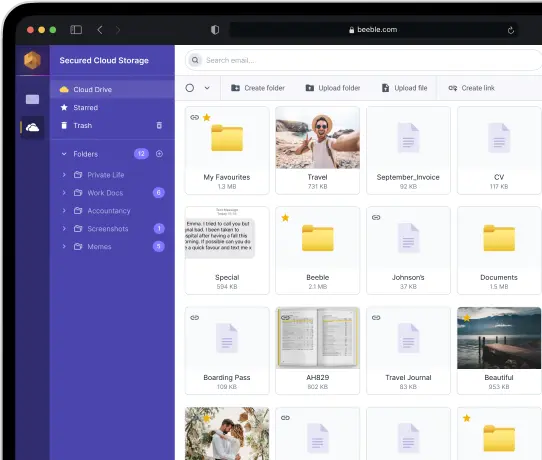

Our end-to-end encrypted email and cloud storage solution provides the most powerful means of secure data exchange, ensuring the safety and privacy of your data.

/ Create a free account