The Digital Playground: Redefining Children’s Rights in a Data-Driven Economy

For decades, the internet has operated on a foundational assumption: its users are adults capable of navigating complex terms of service and managing their own privacy. Yet, as of early 2026, the reality has shifted dramatically. One in three internet users globally is a child. This demographic shift has exposed a fundamental architectural flaw in our digital world. We have built a high-speed highway and invited children to play on it without installing a single crosswalk.

The conflict is not merely technical; it is commercial. The business models of modern tech giants are fueled by data—the more granular, the better. When these models intersect with the developmental vulnerabilities of children, the result is a systemic erosion of privacy and safety. However, a new paradigm is emerging, one that places the "Best Interests of the Child" at the center of the digital experience.

The "Adult-First" Legacy and Its Fallout

The early internet was a frontier of open exchange, designed by researchers and enthusiasts who valued anonymity and freedom. As it evolved into a commercial powerhouse, the focus shifted toward engagement metrics. Algorithms were tuned to keep eyes on screens, and data collection became the currency of the realm.

For an adult, a recommendation engine might be a minor convenience or a mild annoyance. For a child, whose impulse control and critical thinking are still developing, those same engines can lead down rabbit holes of harmful content or create addictive feedback loops. The industry’s reliance on "dark patterns"—user interface designs that trick people into sharing more data than they intended—is particularly effective against younger users who lack the digital literacy to spot them.

The Shift to Data Minimization

At the heart of the movement to protect children is the principle of data minimization. In the past, the burden was on the user to opt out of tracking. In the new regulatory landscape of 2026, the burden has shifted to the platform to justify why any data is being collected at all.

Data minimization for children means that platforms should only collect the absolute minimum information necessary to provide a specific service. If a child is using a drawing app, the app does not need their GPS location, their contact list, or their browsing history. By treating children’s data as a high-risk category, regulators are forcing a shift from "collect everything" to "protect by default."
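To make the idea concrete, here is a minimal Python sketch of a deny-by-default allowlist. It assumes a hypothetical drawing app in which each feature must declare the fields it needs. The feature names, field names, and the `minimize` helper are illustrative assumptions, not any real platform's code or a regulatory requirement.

```python
from typing import Any

# Hypothetical allowlist: each feature declares the only fields it may collect.
# Feature and field names are illustrative assumptions for a children's drawing app.
JUSTIFIED_FIELDS: dict[str, frozenset[str]] = {
    "draw": frozenset({"canvas_strokes", "brush_settings"}),
    "save_artwork": frozenset({"canvas_strokes", "artwork_title"}),
}


def minimize(feature: str, submitted: dict[str, Any]) -> tuple[dict[str, Any], list[str]]:
    """Return (kept, dropped): only fields justified for `feature` are kept.

    Unknown features have no declared justification, so nothing is collected.
    """
    allowed = JUSTIFIED_FIELDS.get(feature, frozenset())
    kept = {name: value for name, value in submitted.items() if name in allowed}
    dropped = sorted(name for name in submitted if name not in allowed)
    return kept, dropped


if __name__ == "__main__":
    submitted = {
        "canvas_strokes": [(0, 0), (10, 12)],
        "brush_settings": {"color": "blue", "size": 3},
        "gps_location": (51.5, -0.12),
        "contact_list": ["friend@example.com"],
        "browsing_history": ["example.org"],
    }
    kept, dropped = minimize("draw", submitted)
    print(sorted(kept))  # ['brush_settings', 'canvas_strokes']
    print(dropped)       # ['browsing_history', 'contact_list', 'gps_location']
```

Because an undeclared feature maps to an empty allowlist, the default is to collect nothing. That mirrors the shift in burden: the platform has to write down a justification before a field is ever stored.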

The Global Regulatory Wave

We are currently witnessing a global legislative race to catch up with technological reality. The European Union’s Digital Services Act (DSA) has set a high bar, effectively banning targeted advertising to minors based on profiling. In the United Kingdom, the Online Safety Act has matured into a robust framework that requires platforms to perform rigorous risk assessments regarding the safety of their youngest users.

In the United States, the landscape remains more fragmented but is rapidly coalescing. California’s Age-Appropriate Design Code has been challenged in court, yet several other states have adopted similar measures, pushing the industry toward a de facto national standard. These laws share a common thread: they move away from the binary question "is this content illegal?" toward a more nuanced one, "is this design harmful?"

| Regulatory Concept | Old Approach | 2026 Standard (Best Interests) |
|---|---|---|
| Privacy Settings | Protections opt-in (hidden in menus) | High-privacy by default |
| Data Collection | Maximum for monetization | Minimum for functionality |
| Algorithms | Optimized for engagement | Optimized for safety and age-appropriateness |
| Age Verification | Self-declaration (honor system) | Privacy-preserving age estimation |
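The "high-privacy by default" row can be pictured as a settings object whose every field starts at its most protective value. The Python sketch below assumes hypothetical setting names and one locked-off option loosely modeled on the DSA's restriction on profiling-based ads to minors. It illustrates the pattern, not what any law or platform actually specifies.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class MinorAccountSettings:
    """Hypothetical settings for an account believed to belong to a child.

    Every field defaults to its most protective value (high-privacy by default).
    Field names are illustrative assumptions, not any real platform's API.
    """

    profile_public: bool = False
    share_precise_location: bool = False
    autoplay_next_video: bool = False
    personalized_ads: bool = False


# Options a child's account may never switch on; loosely modeled on the DSA's
# restriction on profiling-based advertising to minors (an illustrative assumption).
LOCKED_OFF = frozenset({"personalized_ads"})


def change_setting(settings: MinorAccountSettings, name: str, value: bool) -> MinorAccountSettings:
    """Return an updated copy; protections are only loosened by an explicit call."""
    if name in LOCKED_OFF and value:
        raise PermissionError(f"{name} cannot be enabled on a minor's account")
    # dataclasses.replace raises TypeError for unknown setting names.
    return replace(settings, **{name: value})


if __name__ == "__main__":
    defaults = MinorAccountSettings()
    # Every setting starts switched off.
    print(all(not getattr(defaults, f.name) for f in fields(defaults)))  # True

    updated = change_setting(defaults, "profile_public", True)
    print(updated.profile_public, defaults.profile_public)  # True False

    try:
        change_setting(defaults, "personalized_ads", True)
    except PermissionError as err:
        print(err)  # personalized_ads cannot be enabled on a minor's account
```

Making the object immutable means a protective default can only change through `change_setting`, which is a natural place to log the user's explicit choice or enforce a locked option.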

The Role of Artificial Intelligence

As we navigate 2026, the conversation has expanded to include Generative AI. Large Language Models (LLMs) and AI image generators present new risks, from the creation of non-consensual imagery to the delivery of age-inappropriate advice. The challenge for developers is ensuring that AI safety filters are not just broad, but developmentally aware.

An AI that provides a "safe" answer to an adult might still use language or concepts that are confusing or frightening to an eight-year-old. Protecting children’s rights in the age of AI requires "Safety by Design," where guardrails are baked into the model’s training data rather than slapped on as a post-processing filter.

Practical Takeaways for the Digital Ecosystem

For the digital world to become a safe space for children, the responsibility must be shared between platforms, regulators, and guardians. Here is how the transition is taking shape:

For Platforms and Developers:

- Conduct Child Rights Impact Assessments (CRIA): Before launching a feature, analyze how it might specifically affect a child’s privacy or mental health.

- Eliminate Dark Patterns: Remove features like auto-play, infinite scroll, and deceptive notifications that exploit a child's psychological vulnerabilities.

- Implement Privacy-Preserving Age Verification: Use technology that establishes whether a user is above or below a given age threshold without requiring the collection of sensitive identity documents.
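One way to check age without collecting identity documents is to have a separate age-assurance step hand the platform only a yes/no answer to a threshold question. The Python sketch below assumes a hypothetical provider and uses an HMAC over a shared secret purely to stay within the standard library. A real deployment would more plausibly rely on public-key signatures or verifiable credentials, and would add expiry and anti-replay checks. The function names and token format are illustrative assumptions.

```python
import hashlib
import hmac
import json
from datetime import date

# Assumption: a secret shared by a hypothetical age-assurance provider and the platform.
# HMAC keeps this sketch stdlib-only; real systems would more likely use public-key
# signatures or verifiable credentials, plus expiry and anti-replay checks.
PROVIDER_KEY = b"demo-secret-not-for-production"


def _tag(claim: dict) -> str:
    """Authentication tag over a canonical JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(PROVIDER_KEY, payload, hashlib.sha256).hexdigest()


def issue_age_token(birth_date: date, threshold: int, today: date) -> dict:
    """Provider side: sees the birth date but emits only a yes/no threshold claim."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    age = today.year - birth_date.year - (0 if had_birthday else 1)
    claim = {"threshold": threshold, "meets_threshold": age >= threshold}
    return {"claim": claim, "tag": _tag(claim)}


def platform_accepts(token: dict) -> dict:
    """Platform side: checks the tag and learns only the threshold answer."""
    if not hmac.compare_digest(_tag(token["claim"]), token["tag"]):
        raise ValueError("age token failed verification")
    return token["claim"]


if __name__ == "__main__":
    token = issue_age_token(date(2015, 6, 1), threshold=13, today=date(2026, 1, 15))
    print(platform_accepts(token))  # {'threshold': 13, 'meets_threshold': False}
```

The platform never sees the birth date: it learns only whether the user meets the threshold, and any tampering with that answer invalidates the tag.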

For Parents and Educators:

- Utilize Built-in Protections: Most major platforms now offer robust parental controls, such as Google’s Family Link and Apple’s Screen Time, that allow granular control over app permissions and data sharing.

- Focus on Digital Agency: Move beyond just "blocking" content. Teach children why certain data is private and how algorithms try to capture their attention.

- Demand Transparency: Support platforms that are transparent about their data practices and avoid those that treat child safety as an optional extra.

A Future Built on Trust

The internet is no longer an optional part of childhood; it is the infrastructure of modern education, socialization, and play. Protecting children’s rights in this space is not about restricting their access to the world, but about ensuring the world they access is not predatory. By prioritizing the "Best Interests of the Child" over the commercial interests of the data economy, we are not just protecting a vulnerable group—we are building a more ethical, transparent, and trustworthy internet for everyone.

Sources

- UN Convention on the Rights of the Child (General Comment No. 25 on the digital environment)

- European Commission: The Digital Services Act (DSA) Compliance Guidelines

- UK Information Commissioner’s Office (ICO): The Age-Appropriate Design Code

- UNICEF: Children’s Rights in the Digital Age Reports

- Federal Trade Commission (FTC): COPPA Rule Updates and Enforcement Trends

See you on the other side.

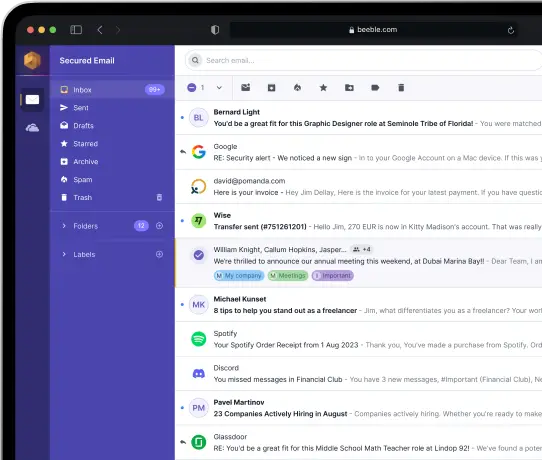

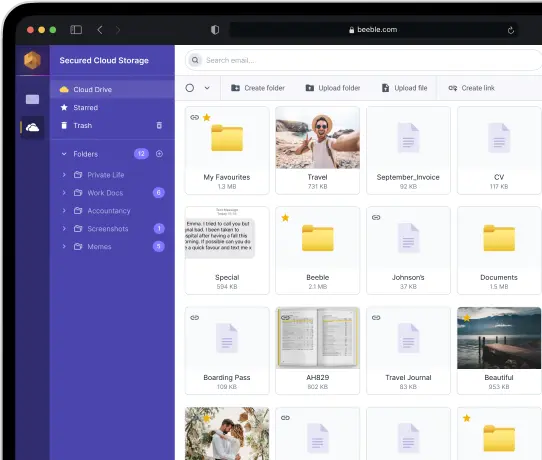

Our end-to-end encrypted email and cloud storage solution provides the most powerful means of secure data exchange, ensuring the safety and privacy of your data.

/ Create a free account