The End of the General-Purpose Era: How Taalas Hardwired the Future of AI

For the last decade, the tech world has operated under a single, expensive assumption: AI requires massive, general-purpose GPUs and a complex software stack called CUDA. This assumption turned Nvidia into the most valuable company on Earth and created a 'compute-debt' that every startup and enterprise had to pay.

On February 19, 2026, that assumption was put to the test. The Canadian startup Taalas emerged from stealth to demonstrate what many in the industry thought was impossible, or at least decades away. They didn't just build a faster chip; they built a chip that is the model. By hardwiring the Llama 3.1 8B model directly into the silicon's metal layers, Taalas sidesteps the memory wall, the power crisis, and the Nvidia tax in one fell swoop.

The Death of the Von Neumann Bottleneck

To understand why this matters, we have to look at how traditional chips work. Whether it is an Intel CPU or an Nvidia B200, they all follow the von Neumann architecture: instructions and data are stored in memory (DRAM for a CPU, HBM for a high-end GPU) and shuffled back and forth to the processor. For large language models (LLMs), this shuffling is the primary cause of latency and power consumption, because generating each token means reading the model's weights from memory again. We aren't limited by how fast we can compute; we are limited by how fast we can move data.
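A back-of-the-envelope calculation makes the bottleneck concrete. The sketch below assumes every weight is read from memory once per generated token, 16-bit weights, and an illustrative 8 TB/s of memory bandwidth; none of these figures come from the Taalas announcement, and the calculation ignores batching, caches, and the KV cache.

```python
def max_decode_tokens_per_second(params, bytes_per_param, bandwidth_bytes_per_s):
    """Upper bound on single-stream decode speed when all weights
    must be streamed from memory once per generated token."""
    model_bytes = params * bytes_per_param
    return bandwidth_bytes_per_s / model_bytes

# Assumed values for illustration only (not from the article).
PARAMS = 8e9          # an 8B-parameter model
BYTES_PER_PARAM = 2   # 16-bit weights
BANDWIDTH = 8e12      # 8 TB/s of memory bandwidth

bound = max_decode_tokens_per_second(PARAMS, BYTES_PER_PARAM, BANDWIDTH)
print(f"{bound:.0f} tokens/s")  # prints: 500 tokens/s
```

Under these assumptions a single stream tops out at a few hundred tokens per second regardless of how much arithmetic the chip can do, which is why serving stacks batch many users together to reuse each weight read.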

Taalas has discarded this paradigm. By embedding the weights of Llama 3.1 8B into the upper metal layers of the chip, the model no longer 'loads' from memory. The model is the circuit. This eliminates the need for High Bandwidth Memory (HBM) entirely. Without the constant movement of weights between memory and compute, power draw drops and per-token latency falls sharply.

17,000 Tokens Per Second: A New Reality

The performance metrics released by Taalas are staggering. A single 250W chip, which can be cooled with a standard air fan, generates 17,000 tokens per second for a single user. To put that in perspective, even a top-tier GPU cluster typically delivers only a fraction of that speed to an individual stream, because of the overhead of managing memory and general-purpose kernels.
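The two headline figures can be combined into per-token numbers. This is a minimal sketch that assumes the chip draws its full 250W while sustaining 17,000 tokens per second; the announcement as described here does not say whether both figures apply at the same operating point.

```python
POWER_WATTS = 250.0           # chip power quoted in the article
TOKENS_PER_SECOND = 17_000.0  # single-user throughput quoted in the article

def per_token_metrics(power_watts, tokens_per_second):
    """Return (seconds per token, joules per token) for a steady stream."""
    seconds_per_token = 1.0 / tokens_per_second
    joules_per_token = power_watts / tokens_per_second
    return seconds_per_token, joules_per_token

latency_s, energy_j = per_token_metrics(POWER_WATTS, TOKENS_PER_SECOND)
print(f"{latency_s * 1e6:.1f} microseconds per token")  # prints: 58.8 microseconds per token
print(f"{energy_j * 1e3:.1f} millijoules per token")    # prints: 14.7 millijoules per token
```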

Because the chip is specialized for one specific model, it doesn't need the 'fat' of a general-purpose processor. There are no unused circuits for graphics rendering or legacy computations. Every square millimeter of the die is dedicated to the inference of Llama 3.1.

| Feature | Nvidia B200 (General Purpose) | Taalas Llama-Specific Chip |
|---|---|---|
| Memory Type | HBM3e (External) | Hardwired (Internal Metal Layers) |
| Cooling | Liquid Cooling Recommended | Standard Air Cooling |
| Throughput | High (Batch Dependent) | 17,000 Tokens/Sec (Single User) |
| Manufacturing Cost | Extremely High | ~20x Lower |
| Flexibility | Runs any model | Hardwired to Llama 3.1 8B |

The 20x Cost Advantage

The most disruptive aspect of the Taalas announcement isn't the speed but the economics. By removing HBM and simplifying the architecture, Taalas claims a manufacturing cost 20 times lower than a comparable GPU setup.

For years, Nvidia’s 'moat' was CUDA—the software layer that made it easy for developers to write AI code. But if the model is already baked into the silicon, you don't need CUDA. You don't need a compiler. You simply feed the chip an input and receive an output. This 'model-as-an-appliance' approach turns AI from a high-maintenance supercomputing task into a commodity hardware component.

From Model to Silicon in 60 Days

The obvious critique of hardwired silicon is rigidity. If you bake Llama 3.1 into a chip today, what happens when a newer Llama release supersedes it tomorrow?

Taalas addressed this by revealing their automated 'model-to-lithography' pipeline. They have reduced the time from a finished model checkpoint to a tape-out-ready design to just two months. While this is still far slower than downloading a new weight file from Hugging Face, the trade-off is becoming attractive for hyperscalers. If a company knows it will be running a specific version of a model billions of times a day, the efficiency of a hardwired chip can outweigh the flexibility of a GPU.
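The trade-off can be framed as a break-even calculation: a one-off design cost for the hardwired chip against a lower running cost per token. The sketch below uses entirely hypothetical numbers (the design cost, both per-token costs, and the daily volume are placeholders, not figures from Taalas) and ignores the two-month lead time.

```python
def break_even_tokens(design_cost, gpu_cost_per_mtok, asic_cost_per_mtok):
    """Tokens that must be served before the one-off design cost is
    repaid by the per-token saving. Costs are per million tokens."""
    saving_per_mtok = gpu_cost_per_mtok - asic_cost_per_mtok
    if saving_per_mtok <= 0:
        raise ValueError("hardwired chip must be cheaper per token to break even")
    return design_cost / saving_per_mtok * 1e6

# Hypothetical inputs for illustration only.
DESIGN_COST = 10_000_000.0   # one-off cost of a model-specific chip design
GPU_COST_PER_MTOK = 0.20     # running cost per million tokens on GPUs
ASIC_COST_PER_MTOK = 0.01    # running cost per million tokens on the hardwired chip
DAILY_TOKENS = 1e12          # tokens served per day

tokens = break_even_tokens(DESIGN_COST, GPU_COST_PER_MTOK, ASIC_COST_PER_MTOK)
days = tokens / DAILY_TOKENS
print(f"break-even after {tokens:.3g} tokens, about {days:.0f} days at this volume")
```

The shape of the result matters more than the placeholder values: the higher the daily volume on one fixed model version, the sooner the design cost is recovered, and a model that is retired before that point never pays for its chip.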

The Geopolitical and Industrial Ripple Effect

This shift marks the beginning of the 'Embedded AI' era. We are moving away from centralized 'God-models' running in massive, water-cooled data centers toward specialized, hyper-efficient silicon that can live anywhere.

Imagine an autonomous vehicle with a hardwired vision model that requires zero external memory, or, with lower-power versions of the same idea, a smartphone that runs a local LLM at data-center speed without draining the battery. If the claimed 20x reduction in manufacturing cost holds, Taalas is effectively democratizing the hardware layer of the AI revolution.

Practical Takeaways for the AI Industry

The emergence of hardwired AI chips changes the roadmap for every tech leader. Here is what you should consider:

- Evaluate Model Stability: If your business relies on a specific model (like Llama 3.1), it is time to look at ASIC (Application-Specific Integrated Circuit) solutions rather than general-purpose GPU rentals.

- Rethink the 'Moat': If hardware becomes a commodity and CUDA is no longer the gatekeeper, your value must come from proprietary data and fine-tuning, not just access to compute.

- Prepare for the Edge: A 250W, air-cooled chip fits in an ordinary server or on-premise appliance, which means high-tier AI is coming to the edge. Start planning for on-premise, high-speed inference that doesn't require a cloud provider.

- Watch the 'Fast-Follower' Models: As the 'model-to-silicon' pipeline shrinks, the advantage of being 'first' to a new model architecture may be eclipsed by the advantage of being the 'most efficient' on a hardwired chip.

Nvidia’s empire was built on the idea that AI is a software problem solved by flexible hardware. Taalas has just argued that AI is a hardware problem solved by inflexible, perfect silicon. If the market follows the efficiency, the era of the GPU king may be drawing to a close.

Sources

- Taalas Official Technical Briefing (February 2026)

- Semiconductor Engineering: The Rise of Hardwired Neural Networks

- Meta AI: Llama 3.1 Architecture and Implementation Standards

- Journal of Applied Physics: Metal-Layer Logic and Memory Integration

See you on the other side.

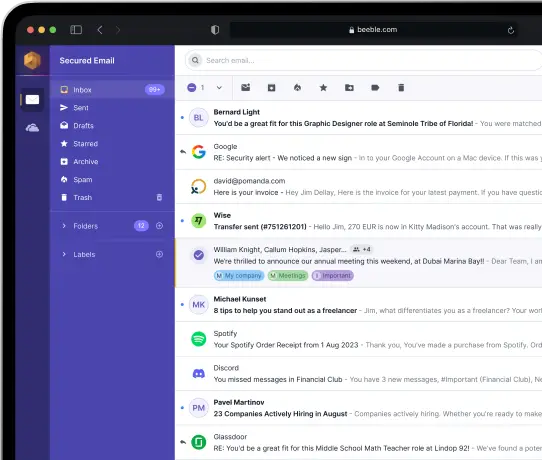

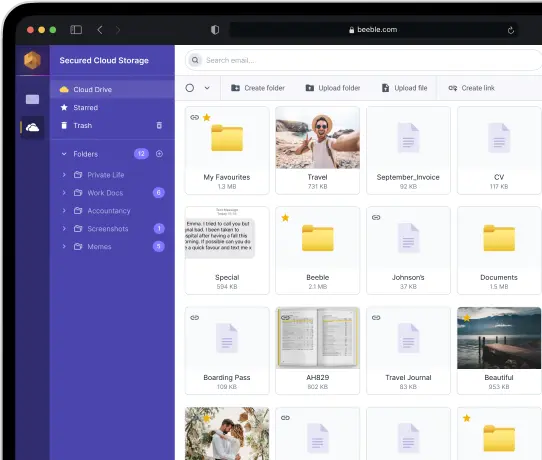
