OpenAI Debuts GPT-5.3-Codex-Spark: The First Fruit of the $10B Cerebras Partnership

In a move that signals a deeper shift toward vertical integration, OpenAI has announced the release of GPT-5.3-Codex-Spark. This lightweight version of its flagship agentic coding model is not just a software iteration; it represents the first tangible result of OpenAI’s massive $10 billion partnership with hardware innovator Cerebras. By pairing a streamlined model architecture with dedicated, wafer-scale silicon, OpenAI aims to tackle one of the industry's most persistent bottlenecks: inference latency.

The Need for Speed in Agentic Coding

When OpenAI launched the full-scale GPT-5.3-Codex earlier this month, it set a new bar for "agentic" capabilities. Unlike traditional autocomplete tools, agentic models are designed to operate autonomously—writing tests, debugging errors, and iterating on codebases without constant human prompting. However, these complex reasoning loops require significant compute power, often leading to "laggy" experiences that disrupt a developer's flow.

GPT-5.3-Codex-Spark is OpenAI’s answer to this friction. Described as a "smaller version" of the flagship model, Spark is optimized for rapid-fire inference. It is designed to handle the high-frequency, low-latency tasks that make up much of day-to-day software engineering, such as real-time syntax correction and instant unit test generation. By reducing the parameter count while retaining the core reasoning behavior of the GPT-5.3 family, OpenAI has created a tool that feels more like a local compiler than a remote cloud service.

Silicon Synergy: The Cerebras Advantage

The most significant aspect of the Spark announcement is its underlying infrastructure. For the first time, OpenAI is moving away from a one-size-fits-all GPU approach for its public-facing models. Instead, Spark runs on dedicated hardware provided by Cerebras, a company famous for its Wafer-Scale Engine (WSE)—a single chip the size of a dinner plate containing trillions of transistors.

When generating text token by token, GPUs are often limited by memory bandwidth rather than raw compute: the model's weights sit in external high-bandwidth memory and must be streamed to the processor for every token produced. Cerebras chips instead place large amounts of memory directly on the wafer, alongside the compute cores and high-bandwidth on-chip interconnects. This design is meant to let a model like Spark stay "on-chip," largely avoiding the slow data transfers between the processor and external memory.
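A back-of-the-envelope model shows why keeping weights close to the compute matters. When a model generates one token at a time at batch size 1, each step must read roughly every weight once, so memory bandwidth sets a floor on per-token latency. The sketch below assumes that simplified memory-bound regime and ignores compute time, KV-cache traffic, and batching. The 10-billion-parameter model and both bandwidth figures are hypothetical round numbers. They are not published specifications for Spark or for any Cerebras or GPU product.

```python
def min_seconds_per_token(num_params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Lower bound on decode latency when every weight is read once per token.

    Assumes a purely memory-bound regime (batch size 1, compute time ignored).
    """
    weight_bytes = num_params * bytes_per_param
    return weight_bytes / bandwidth_bytes_per_s


if __name__ == "__main__":
    # Hypothetical model: 10 billion parameters stored in 16-bit (2-byte) precision.
    params = 10e9
    bytes_each = 2

    # Hypothetical bandwidths, chosen only to contrast off-chip and on-chip memory.
    scenarios = {
        "off-chip memory at 1 TB/s": 1e12,
        "on-chip memory at 100 TB/s": 100e12,
    }

    for label, bandwidth in scenarios.items():
        t = min_seconds_per_token(params, bytes_each, bandwidth)
        print(f"{label}: {t * 1e3:.1f} ms/token, ceiling of ~{1 / t:,.0f} tokens/s")
```

With these assumed numbers, the 20 GB of weights cap the off-chip case at 20 ms per token (about 50 tokens per second). The on-chip case drops to 0.2 ms per token. Real systems land elsewhere once compute, caching, and batching enter the picture.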

"Integrating Cerebras into our mix of compute solutions is all about making our AI respond much faster," OpenAI stated during the partnership announcement last month.

By treating the hardware and software as a single, cohesive unit, OpenAI aims to reach inference speeds that are difficult to match on standard cloud instances.

A $10 Billion Milestone

The release of Spark marks the "first milestone" in a multi-year agreement between OpenAI and Cerebras. The $10 billion deal, announced in early 2026, initially prompted speculation about how OpenAI would diversify its hardware stack beyond its long-standing reliance on NVIDIA.

This partnership suggests that OpenAI is following the path of tech giants like Apple and Google by designing software that is purpose-built for specific silicon. For OpenAI, the goal is twofold: reducing the astronomical costs of running frontier models and providing a snappier user experience that keeps developers within its ecosystem. Spark serves as a proof of concept for this strategy, demonstrating that specialized hardware can make a "smaller" model punch well above its weight class.

Comparing the Codex Lineup

To understand where Spark fits into the current development landscape, it is helpful to look at how it compares to the standard GPT-5.3-Codex model.

| Feature | GPT-5.3-Codex | GPT-5.3-Codex-Spark |
|---|---|---|
| Primary Use Case | Complex architecture design, legacy refactoring | Real-time debugging, unit testing, rapid prototyping |
| Hardware | Standard GPU clusters | Dedicated Cerebras wafer-scale clusters |
| Latency | Moderate (optimized for accuracy) | Ultra-low (optimized for speed) |
| Agentic Depth | High (can manage multi-file projects) | Medium (optimized for iterative tasks) |
| Cost per Token | Premium | Standard / High-Volume |
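The division of labor in the table above can be expressed as a simple routing rule inside a developer's own tooling. The sketch below is hypothetical. Its task categories mirror the table's "Primary Use Case" row, and its `multi_file` flag stands in for the "Agentic Depth" row. The model identifier strings are placeholders, because the exact names exposed by OpenAI's API are not confirmed here.

```python
from enum import Enum, auto


class Task(Enum):
    # Categories mirror the "Primary Use Case" row of the comparison table.
    ARCHITECTURE_DESIGN = auto()
    LEGACY_REFACTORING = auto()
    REALTIME_DEBUGGING = auto()
    UNIT_TESTING = auto()
    RAPID_PROTOTYPING = auto()


# Hypothetical identifiers: replace with the model names your provider actually exposes.
FULL_MODEL = "gpt-5.3-codex"
SPARK_MODEL = "gpt-5.3-codex-spark"

# Work the table assigns to the full model.
DEEP_TASKS = {Task.ARCHITECTURE_DESIGN, Task.LEGACY_REFACTORING}


def pick_model(task: Task, multi_file: bool = False) -> str:
    """Send deep or multi-file work to the full model and iterative work to Spark."""
    if task in DEEP_TASKS or multi_file:
        return FULL_MODEL
    return SPARK_MODEL


if __name__ == "__main__":
    for task, multi in [(Task.UNIT_TESTING, False),
                        (Task.REALTIME_DEBUGGING, True),
                        (Task.LEGACY_REFACTORING, False)]:
        print(f"{task.name} (multi_file={multi}) -> {pick_model(task, multi)}")
```

A plain lookup like this keeps the routing decision explicit and easy to audit. Teams could route on richer signals, such as diff size, without changing the overall shape.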

Practical Takeaways for Developers

For developers looking to integrate Spark into their workflow, the transition should be relatively seamless, but there are a few strategic ways to maximize its utility:

- Use Spark for Iterative Loops: Reserve the full GPT-5.3-Codex for high-level architectural decisions. Use Spark for the "inner loop" of development—writing functions, fixing linter errors, and generating boilerplate.

- Optimize for Local IDEs: Because Spark is designed for low latency, it is ideally suited for IDE extensions where immediate feedback is critical. Check whether your current plugins support the Spark endpoint for a more responsive coding experience.

- Monitor Token Efficiency: Because Spark responds faster, an agentic loop built on it can issue more API calls in the same amount of time. Make sure your rate limits and billing alerts are configured to handle the increased request volume.

- Watch for "Hallucination Speed": A faster model can also produce errors faster. Always maintain a robust testing suite to verify the code generated by Spark, especially when it is operating in an autonomous agentic mode.
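For the rate-limit advice above, one common client-side safeguard is a token bucket. It caps how many requests an agentic loop can send per second, so a fast model cannot burn through a quota in one burst. The sketch below is a generic, hypothetical limiter that makes no API calls. Its "bucket tokens" are request permits, not model tokens. The rate and burst values are illustrative and should be set below whatever limits your account actually has.

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side limiter: each request consumes one permit from the bucket.

    Permits here are request slots, not LLM tokens.
    """

    def __init__(self, rate_per_s: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_s          # permits refilled per second
        self.capacity = capacity        # maximum burst size
        self.tokens = float(capacity)   # start with a full bucket
        self.clock = clock              # injectable clock, handy for tests
        self.last = clock()

    def _refill(self) -> None:
        # Add permits for the time elapsed since the last check, up to capacity.
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)

    def try_acquire(self) -> bool:
        """Take one permit if available; return False instead of blocking."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def acquire(self) -> None:
        """Block until a permit is available."""
        while not self.try_acquire():
            # Sleep roughly until the next permit should have accumulated.
            time.sleep((1 - self.tokens) / self.rate)


if __name__ == "__main__":
    # Illustrative settings: at most 5 requests per second, bursts of up to 10.
    bucket = TokenBucket(rate_per_s=5, capacity=10)
    for step in range(12):
        bucket.acquire()
        # send_request_to_model(...)  # hypothetical call to your model client
        print(f"step {step} sent")
```

A client-side limiter complements, rather than replaces, provider-side limits and billing alerts. It smooths bursts before they ever reach the API.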

The Future of Vertical AI

The launch of GPT-5.3-Codex-Spark is a clear indicator that the future of AI is not just about bigger models, but about smarter integration. By controlling the stack from the chip level up to the user interface, OpenAI is attempting to define the next era of compute. If the Cerebras partnership continues to yield these kinds of performance gains, the industry may see a shift away from general-purpose AI hardware toward highly specialized, model-specific silicon. For now, developers have a new, faster tool in their belt, and the race for the most efficient AI-assisted workflow has entered a new, high-speed chapter.

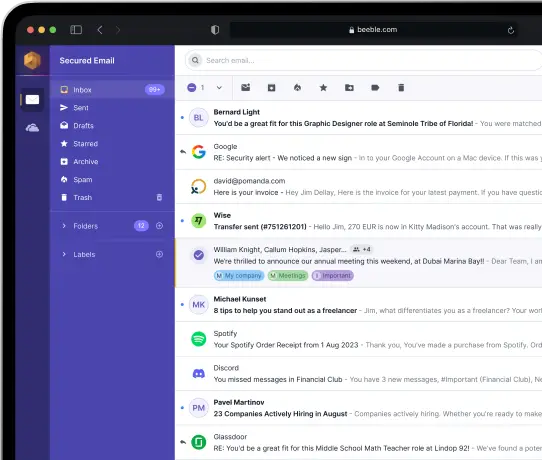

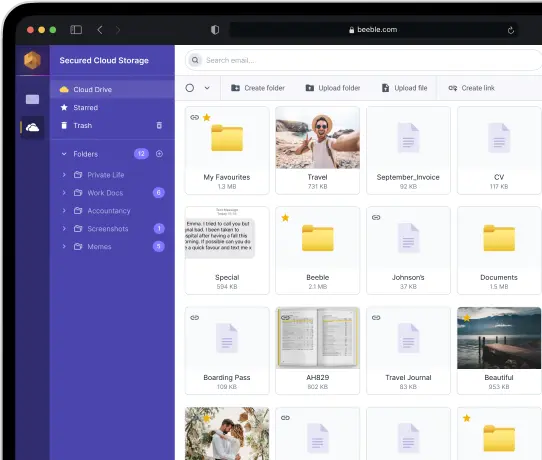
