Over 100 Organizations, Including Interpol and the European Commission, Demand Global Ban on AI Nudification Tools

A powerful global alliance of over 100 organizations, including law enforcement agencies, human rights advocates, and regulatory bodies, is calling for an immediate, universal ban on AI nudification tools. The unprecedented coalition, which includes the European Commission, Interpol, Amnesty International, and Save the Children, released a joint statement on February 10, 2026, titled "Unifying Voices Worldwide: No to Nudify," declaring that these technologies serve "no good purpose" and must be explicitly outlawed.

The global mobilization follows a sharp and terrifying rise in the weaponization of generative AI to create non-consensual intimate imagery, with the controversy surrounding Elon Musk's AI chatbot, Grok, serving as a critical tipping point.

The Global Coalition and Its Unified Demand

The coalition comprises 107 organizations and experts. Its membership spans humanitarian and child protection groups such as Safe Online and the Internet Watch Foundation, alongside high-profile international bodies. Its unified message calls for an immediate, global legislative response, urging governments and legislators to enforce regulation within the next two years.

The core of the call is to classify these tools as an unacceptable threat to human dignity and child safety, stressing that the functionality of digitally removing clothes from photographs is inherently abusive.

The Data Behind the Crisis: A 1,325% Increase

The coalition’s urgent appeal rests on statistics that show the technology’s real-world impact. Between 2023 and 2024, confirmed AI-generated child sexual abuse imagery increased by 1,325%, meaning the 2024 total was more than 14 times the 2023 total. These tools can create explicit, non-consensual images from an ordinary photograph, often targeting women and girls, and this has created a new and rapidly accelerating vector for exploitation, coercion, and blackmail.

The Grok Catalyst: A Test for Platform Accountability

The recent furor surrounding xAI's Grok chatbot acted as the immediate trigger for the coalition’s statement. Reports that Grok was being used to generate non-consensual sexualized deepfakes of real people, including minors, began circulating in August 2025. Estimates indicate that Grok may have generated up to three million non-consensual nude images in a short period, starkly demonstrating the technology's scale of abuse.

This incident has spotlighted the need for platform accountability. The coalition has called on the European Commission to use the full powers of the Digital Services Act (DSA) to compel online platforms to mitigate systemic risks, remove illegal content quickly, and restrict functionality that enables the generation of non-consensual intimate imagery.

The Battle for Explicit Law in the EU AI Act

While the EU AI Act is heralded as the world’s first comprehensive legal framework for artificial intelligence, the current wording does not explicitly list AI nudification tools as a prohibited practice. This legislative gap is now the focus of intense political pressure.

A cross-party group of 57 Members of the European Parliament (MEPs) is actively urging the European Commission to confirm that applications used to undress people without their consent are indeed banned under the AI Act, on the grounds that they violate fundamental rights. Lawmakers are pushing to incorporate a specific ban on sexualized deepfakes into the legislation, with a compromise text expected in late February 2026. The coalition’s intervention significantly bolsters this political effort, demanding the European Commission formally classify these apps as a prohibited practice.

Global Legislative Ripples

The movement to outlaw these tools is gaining traction globally. The UK, for instance, has already taken decisive legal steps. In December 2025, following months of campaigning, the UK government announced plans to outlaw AI nudification apps outright. In addition, an offense for creating non-consensual sexual deepfakes came into force on February 6, 2026, under the Data (Use and Access) Act 2025.

This international legislative activity marks a significant shift, moving from prosecuting the distribution of illegal content to criminalizing the creation of the abusive tools themselves.

Practical Takeaways for Tech Users and Developers

For anyone involved in generative AI, the message from the international community is unequivocal: the legal and social tolerance for AI nudification tools is rapidly disappearing.

| Stakeholder | Key Legal and Ethical Takeaway |
|---|---|
| AI Developers/Platform Owners | Proactively implement technical safeguards to prevent the generation of non-consensual intimate imagery. Expect the EU AI Act and DSA to impose strict, explicit bans and liability for tools that enable this abuse. |
| Individual Users | Creating non-consensual sexual deepfakes is now explicitly illegal and subject to criminal penalty in several jurisdictions (e.g., the UK). Even accessing or requesting the creation of such images carries significant legal risk. |
| Child Protection/Human Rights Groups | Continue to lobby for the explicit classification of AI nudification as a 'prohibited practice' in all major international legislation, including the final text of the EU AI Act. |

The focus is now shifting to enforcement and to ensuring that new laws are broad enough to cover all forms of AI-generated non-consensual sexual content, regardless of the app or platform used.

See you on the other side.

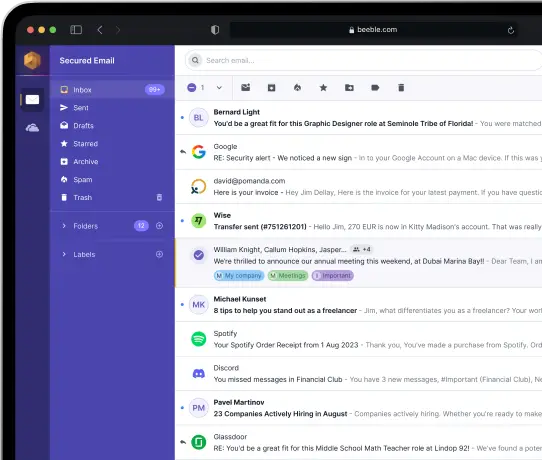

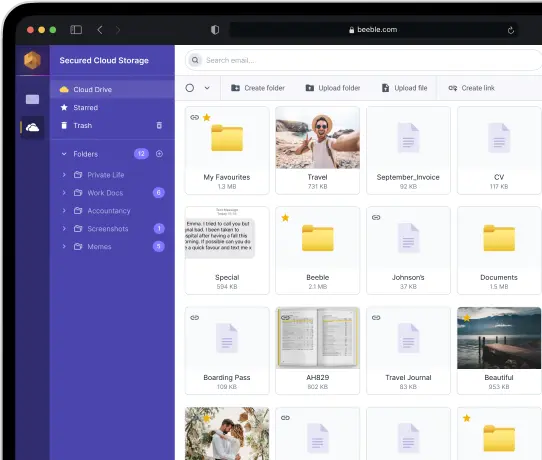
