The Concrete Reality of AI: Why Data Centers Are the Tech’s True Monument

While the world debates whether artificial intelligence is a bubble or a generational shift, the answer is being written in steel, concrete, and silicon. For the average user, AI is an ethereal concept—a chat interface or a generative image tool that exists somewhere in the "cloud." But for those monitoring the global economy and infrastructure, AI is increasingly defined by its physical footprint. Data centers have become the most significant real-world evidence of the AI revolution, transforming from quiet server warehouses into the high-voltage powerhouses of a new industrial era.

As of early 2026, the scale of this expansion is unprecedented. We are no longer just building more data centers; we are building a different kind of infrastructure entirely. To understand where AI is going, we have to look at the massive facilities powering it.

The Shift from Storage to Computation

For decades, data centers were primarily digital filing cabinets. Their main job was to store emails, host websites, and run enterprise software. These tasks required reliable power and cooling, but the density of the hardware was relatively manageable. AI has fundamentally changed that architectural blueprint.

Training a modern large language model (LLM) or running complex agentic workflows requires thousands of specialized GPUs working in perfect synchronization. This shift has led to a massive increase in rack density. Where a standard server rack a few years ago might have drawn 10 to 15 kilowatts of power, modern AI-optimized racks are pushing 100 kilowatts or more. This isn't just an incremental upgrade; it is a total reimagining of how buildings are engineered to handle heat and electricity.
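To make the density jump concrete, here is a minimal back-of-the-envelope sketch. The per-rack figures come from the paragraph above; the 200-rack hall and the helper names `hall_power_mw` and `racks_supported` are hypothetical, chosen only for illustration.

```python
# Illustrative arithmetic only: the hall size below is a made-up example.
LEGACY_RACK_KW = 15   # upper end of the 10-15 kW range mentioned above
AI_RACK_KW = 100      # AI-optimized rack figure mentioned above


def hall_power_mw(rack_count: int, kw_per_rack: float) -> float:
    """Return the IT load of a hall in megawatts (1 MW = 1000 kW)."""
    return rack_count * kw_per_rack / 1000


def racks_supported(power_budget_mw: float, kw_per_rack: float) -> int:
    """Return how many whole racks fit inside a fixed power budget."""
    return int(power_budget_mw * 1000 // kw_per_rack)


if __name__ == "__main__":
    racks = 200  # hypothetical hall size
    print(hall_power_mw(racks, LEGACY_RACK_KW))  # 3.0
    print(hall_power_mw(racks, AI_RACK_KW))      # 20.0
    print(racks_supported(3.0, AI_RACK_KW))      # 30
```

Under these assumptions, the same 200 racks go from a 3 MW load to a 20 MW load, and a building wired for 3 MW could host only 30 AI racks. That is why the change reads as a redesign rather than an upgrade.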

The Power Hunger: A New Energy Paradigm

If you want to see the impact of AI, look at the local power grid. The International Energy Agency and various utility providers have noted that, in several regions, data center electricity consumption is doubling every few years. In some tech hubs, data centers now account for a double-digit percentage of total electricity demand.

This hunger for power is the clearest indicator that businesses are betting their futures on AI. Companies like Microsoft, Google, and Amazon are not just buying chips; they are securing energy contracts decades into the future. We are seeing a resurgence of interest in nuclear energy, specifically Small Modular Reactors (SMRs), as tech giants realize that traditional renewable sources like wind and solar—while essential—cannot always provide the 24/7 "baseload" power required by a massive training cluster.

Cooling the Beast: From Fans to Fluids

One of the most tangible changes inside these facilities is the sound. Traditional data centers are loud, filled with the roar of thousands of high-speed fans pushing air across heat sinks. However, as AI chips become more powerful, air cooling is reaching its physical limits. Air simply cannot carry heat away fast enough to keep the latest processors from throttling.

This has led to the widespread adoption of liquid cooling. Some facilities use "cold plates" that circulate fluid directly over the chips, while others use immersion cooling, where the entire server is submerged in a non-conductive, dielectric liquid. When you walk into a cutting-edge data center today, it looks less like a library and more like a high-tech chemical processing plant. This transition represents billions of dollars in retrofitting and new construction—a physical commitment to the technology that goes far beyond software updates.
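A rough way to see why air runs out of headroom is the sensible-heat relation Q = density × flow × specific heat × temperature rise. The sketch below rearranges it to estimate how much coolant must move past a rack. The fluid properties are approximate room-temperature textbook values, the 10 K temperature rise is an assumption, and the function name is hypothetical. Real facilities involve many more factors, such as chip-level heat flux, pumping power, and specialized dielectric fluids.

```python
# Approximate room-temperature fluid properties (textbook values).
AIR = {"density": 1.2, "specific_heat": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 998.0, "specific_heat": 4186.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_per_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Flow needed to carry heat_w watts with a coolant temperature rise of delta_t_k.

    Rearranges Q = density * flow * specific_heat * delta_T for flow.
    """
    return heat_w / (fluid["density"] * fluid["specific_heat"] * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 100_000  # the ~100 kW rack discussed earlier in the article
    delta_t = 10.0         # assumed 10 K coolant temperature rise

    air_flow = volumetric_flow_m3_per_s(rack_heat_w, delta_t, AIR)
    water_flow = volumetric_flow_m3_per_s(rack_heat_w, delta_t, WATER)

    print(f"air:   {air_flow:.2f} m^3/s")
    print(f"water: {water_flow * 1000:.2f} L/s")
    print(f"ratio: {air_flow / water_flow:.0f}x")
```

With these inputs, a single 100 kW rack would need on the order of eight cubic meters of air per second, versus a few liters of water per second. The ratio of several thousand comes almost entirely from water's far higher density and specific heat.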

The Economic Bet: CapEx as a Signal

Economists often look at Capital Expenditure (CapEx) to see where a company truly believes the future lies. In 2025 and 2026, the CapEx of the "Hyperscalers" has reached staggering levels. We are seeing annual investments in the range of $150 billion to $200 billion across the top four or five players, with the vast majority of that spend dedicated to AI infrastructure.

This investment acts as a gravity well for other industries. It drives the construction business, the copper mining industry, and the specialized cooling market. When a company spends $10 billion on a single data center campus, it isn't experimenting; it is building the foundation for what it believes will be the primary engine of global productivity for the next twenty years.

Geopolitics and the Location of Intelligence

In the past, data centers were built near major fiber-optic hubs or large population centers to reduce latency. While latency still matters for some applications, the massive clusters used for training AI models are being built wherever power is cheapest and most abundant. This is shifting the geography of tech.

Regions with stable grids and cooler climates are becoming the new "silicon prairies." We are seeing massive developments in places that were previously overlooked by the tech industry. This geographic shift is a real-world manifestation of AI’s impact, bringing high-paying jobs, infrastructure taxes, and increased demand for local utilities to new corners of the globe.

Practical Takeaways for Businesses and Investors

As the physical reality of AI continues to expand, businesses should consider the following:

- Infrastructure over Hype: When evaluating the longevity of AI, look at the physical build-out. Software trends can be fickle, but billion-dollar infrastructure projects are long-term commitments.

- Energy Literacy: For IT leaders, understanding your organization's carbon footprint and energy efficiency is no longer optional. The cost of AI is increasingly the cost of electricity.

- Supply Chain Resilience: The demand for specialized components (transformers, switchgear, and cooling systems) often exceeds supply. Planning for AI implementation requires a 24-to-36-month view of hardware availability.

- Sustainability Mandates: As data centers face scrutiny for their resource consumption, companies that prioritize "green" AI—models that are more efficient and run on carbon-neutral infrastructure—will have a competitive advantage.
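For the energy-literacy point above, a first-pass estimate of an AI deployment's electricity bill can be sketched as follows. It uses Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, to scale the IT load up to a facility load. Every input in the example (rack count, PUE of 1.2, price of $80 per MWh) is a hypothetical placeholder, not a figure from this article, and the function names are illustrative.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours


def annual_energy_mwh(it_load_kw: float, pue: float, utilization: float = 1.0) -> float:
    """Facility energy per year in MWh.

    it_load_kw:  peak IT load in kilowatts
    pue:         total facility energy divided by IT equipment energy (>= 1.0)
    utilization: average fraction of peak IT load actually drawn (0.0-1.0)
    """
    return it_load_kw * utilization * pue * HOURS_PER_YEAR / 1000


def annual_cost_usd(energy_mwh: float, price_usd_per_mwh: float) -> float:
    """Electricity cost for a year at a flat price per MWh."""
    return energy_mwh * price_usd_per_mwh


if __name__ == "__main__":
    # Hypothetical deployment: ten 100 kW racks, PUE 1.2, flat $80/MWh tariff.
    energy = annual_energy_mwh(it_load_kw=10 * 100, pue=1.2)
    cost = annual_cost_usd(energy, price_usd_per_mwh=80.0)
    print(f"{energy:,.0f} MWh/year, about ${cost:,.0f}/year")
```

A flat tariff and constant utilization are simplifications. Actual contracts often include demand charges and time-of-day pricing, so treat this as a starting point for budgeting conversations rather than a forecast.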

The Monument of the Digital Age

In the future, we may look back at these massive, humming structures the same way we look at the steam engines of the 19th century or the power plants of the 20th. They are the physical heart of our era. While the output of AI—the text, the code, the medical breakthroughs—is what captures our imagination, the data center is the reality that makes it all possible. It is the most honest evidence we have that the AI revolution is not just coming; it is already built.

Sources:

- International Energy Agency (IEA) - Electricity 2024 Report on Data Center Demand

- U.S. Department of Energy - Office of Energy Efficiency & Renewable Energy (Data Center Trends)

- NVIDIA Investor Relations - Data Center Growth and Blackwell Architecture Specifications

- Uptime Institute - 2025 Global Data Center Survey Results

- Goldman Sachs Research - The AI CapEx Cycle and Infrastructure Spending

See you on the other side.

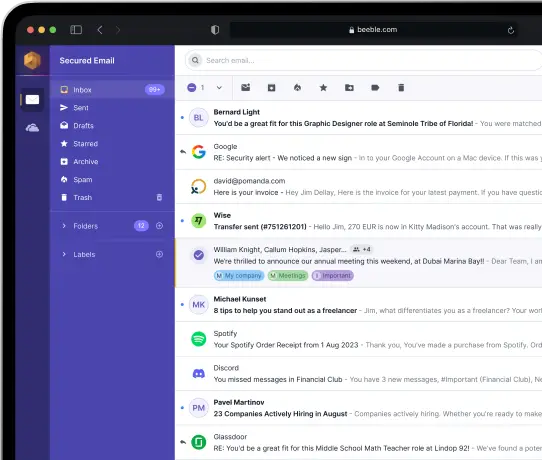

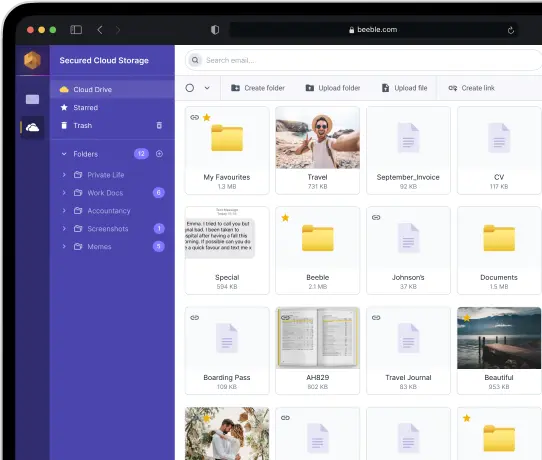
