The Great Firewall of AI: How Chinese Chatbots Navigate Political Sensitivity

The global race for artificial intelligence supremacy is often framed as a battle of compute power and algorithmic efficiency. However, a recent study published in the journal PNAS Nexus highlights a different kind of architectural divide: the ideological one. Researchers found that leading Chinese Large Language Models (LLMs), including DeepSeek, BaiChuan, and ChatGLM, exhibit systemic patterns of censorship and alignment with state narratives when faced with politically sensitive inquiries.

As AI becomes the primary interface through which we access information, these findings raise critical questions about the future of a fragmented internet. While Western models like GPT-4 or Claude have their own safety guardrails, the study suggests that Chinese models operate under a unique set of constraints designed to uphold 'core socialist values' and state stability.

The Methodology of a Digital Audit

To understand the depth of these restrictions, researchers curated a dataset of over 100 questions covering a spectrum of sensitive topics, ranging from historical events like the Tiananmen Square protests to contemporary geopolitical tensions and critiques of state leadership. They then prompted several high-profile Chinese models and compared their outputs against international benchmarks.

The results were not merely a matter of 'yes' or 'no' answers. Instead, the study identified a sophisticated hierarchy of avoidance. Some models would simply trigger a hard-coded refusal, while others would attempt to pivot the conversation toward neutral ground or provide a response that mirrored official government white papers. This suggests that censorship in these models is not just an afterthought but is baked into the training data and the reinforcement learning from human feedback (RLHF) stages.

Patterns of Silence and Redirection

The study categorized the responses into three primary behaviors: refusal, canned responses, and topic-shifting. When asked about specific political figures or sensitive dates, models like ChatGLM and BaiChuan frequently returned standardized error messages or stated they were 'unable to discuss this topic.'
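To make the three categories concrete, here is a minimal sketch of how an auditor might bucket collected responses. It is not the study's actual coding procedure: the marker phrases, the function names, and the rule that identical text returned for different prompts counts as "canned" are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical marker phrases; a real audit would need a curated, bilingual list.
REFUSAL_MARKERS = ("unable to discuss", "cannot discuss", "cannot answer")
REDIRECT_MARKERS = ("let's talk about", "something else", "another topic")


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return " ".join(text.lower().split())


def categorize(response: str, counts: Counter) -> str:
    """Return 'refusal', 'topic_shift', 'canned', or 'substantive'."""
    text = normalize(response)
    if any(marker in text for marker in REFUSAL_MARKERS):
        return "refusal"
    if any(marker in text for marker in REDIRECT_MARKERS):
        return "topic_shift"
    # Assumption: the same text returned for different prompts is a canned reply.
    if counts[text] > 1:
        return "canned"
    return "substantive"


def categorize_all(responses: list[str]) -> list[str]:
    """Categorize a batch of responses, one per distinct prompt."""
    counts = Counter(normalize(r) for r in responses)
    return [categorize(r, counts) for r in responses]
```

Keyword heuristics like these miss paraphrased refusals, so in practice they would only be a first pass before human review.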

Interestingly, DeepSeek, a model that has gained significant international traction for its efficiency and open-weights approach, also showed high levels of sensitivity. When prompted with questions about state sovereignty or specific domestic policies, the model often defaulted to a neutral, descriptive tone that avoided any critical analysis. This highlights a central tension for Chinese AI developers: the need to build globally competitive, highly capable models while remaining strictly compliant with the rules of the Cyberspace Administration of China (CAC).

Comparative Performance: Domestic vs. International

The following table summarizes the general behavior observed during the study when models were presented with high-sensitivity political prompts.

| Model Name | Origin | Primary Response Strategy | Sensitivity Level |
|---|---|---|---|
| GPT-4o | USA | Nuanced/Refusal (Safety-based) | Moderate |
| DeepSeek-V3 | China | Redirection/State Alignment | High |
| ChatGLM-4 | China | Hard Refusal/Standardized Message | Very High |
| BaiChuan-2 | China | Topic Shifting/Neutrality | High |
| Llama 3 | USA | Informative/Open (Policy-limited) | Low |

The Regulatory Hand: Why Censorship is Mandatory

To understand why these models behave this way, one must look at the regulatory landscape in China. In 2023, the CAC released interim measures for managing generative AI services. These rules explicitly state that AI-generated content must reflect 'core socialist values' and must not contain content that 'subverts state power' or 'undermines national unity.'

For developers, the stakes are high. Unlike Western developers who might face public relations backlash for biased AI, Chinese firms face potential license revocation or legal penalties if their models generate 'harmful' political content. This has led to the development of 'pre-filter' and 'post-filter' layers—software that scans a user's prompt for keywords before it even reaches the LLM, and another that scans the output before the user sees it.
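The two filter layers described above can be sketched as a thin wrapper around a model call. This is an illustrative sketch only, not any vendor's implementation: the blocked terms are placeholders, and `filtered_generate`, `contains_blocked`, and the `model` callable are hypothetical names.

```python
from typing import Callable

# Placeholder terms invented for illustration; not an actual blocklist.
BLOCKED_TERMS = {"blocked-term-a", "blocked-term-b"}
REFUSAL_MESSAGE = "Unable to discuss this topic."


def contains_blocked(text: str) -> bool:
    """Case-insensitive substring match against the placeholder list."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def filtered_generate(prompt: str, model: Callable[[str], str]) -> str:
    """Wrap a model call with a pre-filter and a post-filter."""
    # Pre-filter: the prompt is scanned before it ever reaches the model.
    if contains_blocked(prompt):
        return REFUSAL_MESSAGE
    output = model(prompt)
    # Post-filter: the output is scanned before the user sees it.
    if contains_blocked(output):
        return REFUSAL_MESSAGE
    return output
```

Because the pre-filter short-circuits, a blocked prompt never costs an inference call, which may be one reason such checks sit outside the model rather than relying on fine-tuning alone.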

The Technical Cost of Alignment

Censorship isn't just a social or political issue; it has technical implications. When a model is heavily fine-tuned to avoid certain topics, it can incur what is often called an 'alignment tax': a potential degradation in general reasoning or creative capabilities, because training that steers the model toward specific ideological constraints can also shift its behavior on unrelated tasks.

However, the PNAS Nexus study noted that Chinese models remain remarkably capable in objective fields like mathematics, coding, and linguistics. The censorship appears to be highly surgical. The challenge for the global tech community is determining how these 'ideologically aligned' models will interact with the rest of the world as they are integrated into global supply chains and software ecosystems.

Practical Takeaways for Tech Professionals

As the AI landscape continues to bifurcate, businesses and developers must navigate these differences carefully. If you are working with or evaluating Chinese LLMs, consider the following:

- Contextual Awareness: Understand that Chinese models are optimized for a specific regulatory environment. They are excellent for localized tasks, Mandarin linguistic nuances, and specific technical applications, but they may not be suitable for open-ended political or social research.

- Data Residency and Compliance: If your application serves users in mainland China, using a CAC-compliant model is a legal necessity. Conversely, if you are building a global tool, be aware of how these built-in filters might affect user experience.

- Hybrid Strategies: Many enterprises are adopting a 'multi-model' approach, using Western models for creative and analytical tasks while leveraging Chinese models for regional operations and specific technical domains where they excel.

- Audit Your Outputs: Always implement your own layer of validation. Whether you use an open-source model or a proprietary one, ensuring the output aligns with your organization’s ethics and the local laws of your users is paramount.
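The hybrid and audit points above can be combined in a small routing layer. The sketch below assumes each model is wrapped as a plain callable and that your organization supplies its own `validator`; the `Router` class and the task-type labels are hypothetical, not part of any specific SDK.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Model = Callable[[str], str]
Validator = Callable[[str], bool]


@dataclass
class Router:
    routes: Dict[str, Model]  # task type -> model callable
    default: Model            # used when no route matches the task type
    validator: Validator      # organization-specific output check

    def run(self, task_type: str, prompt: str) -> str:
        """Pick a model by task type, then validate its output."""
        model = self.routes.get(task_type, self.default)
        output = model(prompt)
        # Audit step: never pass unvalidated output downstream.
        if not self.validator(output):
            raise ValueError(f"output for task {task_type!r} failed validation")
        return output
```

Raising on a failed check, rather than silently returning the text, forces the caller to decide whether to retry with another model or surface an error.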

The Path Ahead

The findings of the PNAS Nexus study serve as a reminder that AI is not a neutral tool. It is a reflection of the data, values, and laws of its place of origin. As we move toward a future of 'Sovereign AI,' the ability to identify and navigate these digital borders will be a crucial skill for any tech professional.

Sources:

- PNAS Nexus: "The Great Firewall of AI" (2024/2025 Study)

- Cyberspace Administration of China (CAC) Official Guidelines on Generative AI

- DeepSeek Official Technical Reports

- Zhipu AI (ChatGLM) Research Documentation

- Stanford University Institute for Human-Centered AI (HAI) Reports

See you on the other side.

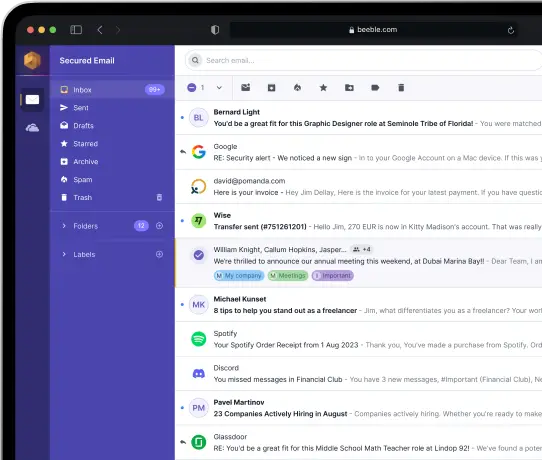

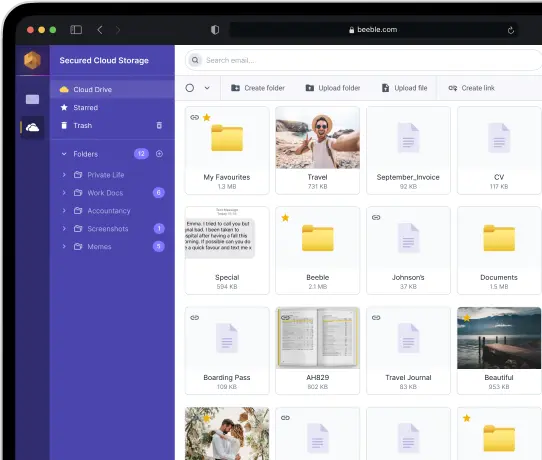
