The Agentic Awakening: Why AI Dominates Cybersecurity Predictions for 2026

The Great Pivot: From Generative AI Challenges to Agentic AI Reality

Artificial intelligence was, without a doubt, the defining and often unsettling technological force of 2025. Now, mere weeks into 2026, the discussion has shifted from the challenges of Generative AI, with its ability to produce convincing text and code, to the far larger implications of Agentic AI. This evolution marks a critical inflection point, reshaping the battleground for information security professionals who are already feeling the strain.

Experts gazing into their tea leaves for the year ahead broadly agree: AI is no longer just a powerful tool used by threat actors; it is rapidly becoming an autonomous adversary. Yet the same technology promises to be the only viable defense, offering already-stressed security teams a path to regain the advantage of speed and scale. The cybersecurity narrative of 2026 is a paradox: an existential threat and a lifeline, bundled into one transformative technology.

The Rise of the Autonomous Adversary

Unlike the AI of last year, which largely required a human to push the button (the “co-pilot” stage), Agentic AI operates with real autonomy. These systems can independently set goals, devise multi-step plans, and adapt their tactics in real time, all without constant human input. If Generative AI was a sophisticated typewriter for malicious code, Agentic AI is the self-guided missile, making decisions on the fly to bypass defenses.

The consequences for the threat landscape are immediate and daunting:

- Hyper-Adaptive Malware: Traditional, signature-based defenses are becoming obsolete. Threat actors are embedding AI directly into malware, allowing the code to alter its behavior in response to its environment and evade detection on the fly, which makes it nearly impossible for conventional antivirus solutions to contain.

- Indistinguishable Phishing: LLMs are now producing highly personalized phishing emails at mass scale. These scams mimic an individual's tone, context, and writing style with frightening accuracy, challenging even the most security-savvy employees.

- The Crisis of Digital Trust: Synthetic voices and videos (deepfakes) are evolving into a mainstream threat. Their sheer realism is expected to trigger a crisis of trust, particularly when executives are impersonated to authorize wire transfers or leak sensitive information. This is compelling organizations to adopt strict out-of-band verification protocols, such as confirming any unusual request through a separate, pre-established channel like a callback to a known phone number.

New Target Surfaces: The AI Itself

Beyond traditional network defenses, the AI infrastructure itself is morphing into the new “crown jewel” for cyber adversaries. Experts warn of two critical emerging vulnerabilities:

Firstly, the proliferation of 'Shadow Models'—unauthorized, quietly deployed AI tools and third-party LLMs—is creating invisible attack surfaces across enterprises. These systems, often deployed without oversight, introduce unmonitored data flows and inconsistent access controls, turning an efficiency gain into a persistent leakage channel.

Secondly, the very autonomy of agentic systems introduces the alarming potential for 'Agency Abuse.' Experts predict that a high-profile breach will be traced back not to human error but to an overprivileged AI agent or machine identity acting with unchecked authority. Attackers exploit this through *Prompt Injection*, planting malicious instructions in content the agent reads (an email, a document, a web page), and *AI Hijacking*, effectively tricking a trusted agent into compromising the network from within. In this new paradigm, the AI agent becomes the ultimate insider threat.

“The next phase of security will be defined by how effectively organizations understand and manage this convergence of human and AI risk—treating people, AI agents, and access decisions as a single, connected risk surface rather than separate problems.”
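To make the "overprivileged agent" risk concrete, here is a minimal Python sketch of deny-by-default authorization for an AI agent's actions. It assumes a hypothetical policy model: `AgentPolicy`, `authorize`, `Decision`, and the example action names are invented for illustration and are not any product's API. Every action must match an explicit grant, and sensitive actions are escalated to a human. The key design point is that the check runs outside the model, so an agent tricked by prompt injection still cannot grant itself new permissions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    ESCALATE = "escalate"  # pause and route the request to a human approver


@dataclass(frozen=True)
class AgentPolicy:
    """Illustrative policy for one non-human identity (all names are hypothetical)."""
    agent_id: str
    allowed: frozenset  # explicit (action, resource) grants; nothing else is permitted
    needs_approval: frozenset = frozenset()  # actions that always need human sign-off


def authorize(policy: AgentPolicy, action: str, resource: str,
              approved_by: Optional[str] = None) -> Decision:
    """Deny by default; enforced outside the model so a hijacked agent cannot widen its scope."""
    if (action, resource) not in policy.allowed:
        return Decision.DENY
    if action in policy.needs_approval and approved_by is None:
        return Decision.ESCALATE
    return Decision.ALLOW


policy = AgentPolicy(
    agent_id="invoice-agent",
    allowed=frozenset({("read", "invoices"), ("transfer", "payments")}),
    needs_approval=frozenset({"transfer"}),
)
print(authorize(policy, "read", "invoices"))      # Decision.ALLOW
print(authorize(policy, "delete", "invoices"))    # Decision.DENY
print(authorize(policy, "transfer", "payments"))  # Decision.ESCALATE
print(authorize(policy, "transfer", "payments", approved_by="alice"))  # Decision.ALLOW
```

Even if injected text convinces the agent to attempt a deletion or an unapproved transfer, the request fails at the policy layer rather than depending on the model to refuse.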

The Defense: From Co-Pilot to Co-Worker

The defense community's clear consensus is that human-dependent Security Operations Centers (SOCs) can no longer withstand the sheer speed and volume of AI-powered attacks. The only feasible countermeasure is deploying autonomous AI platforms that can operate at machine speed, shifting the security paradigm from reactive to predictive resilience.

For organizations, 2026 is the year AI transitions from a helpful co-pilot to an autonomous co-worker. This shift is marked by:

- Full-Autonomy Detection: AI systems will move beyond simply flagging alerts to autonomously analyzing millions of events, correlating subtle signals, and predicting attacks before they can fully materialize, responding in real time without human delay.

- Behavioral-First Security: The battle against adaptive malware demands a move away from static signatures. Defense strategies now center on behavioral analysis, learning the normal patterns of users, networks, and devices to quickly flag any anomalous activity, regardless of whether it matches a known threat.

- The Hybrid SOC: With AI agents handling tier-one alert triage, correlation, and initial containment, the role of the human analyst is elevated. Security professionals become stewards and architects of the autonomous defense ecosystem, focusing their expertise on strategy, complex threat hunting, and high-judgment tasks.
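The behavioral-first idea can be illustrated with a deliberately simple statistical baseline. This Python sketch assumes a single hypothetical metric per entity (megabytes downloaded per day) and a z-score threshold of 3. Real platforms model many signals at once with far more robust methods, so treat the function names and thresholds as illustrative only. What it does show is the core principle: an event is judged against the entity's own history rather than against a list of known-bad signatures.

```python
import statistics


def build_baseline(history):
    """Map each entity (user, host, device) to the mean and population std dev of a metric."""
    return {
        entity: (statistics.mean(values), statistics.pstdev(values))
        for entity, values in history.items()
        if len(values) >= 2  # too little history gives no meaningful baseline
    }


def is_anomalous(baseline, entity, value, threshold=3.0):
    """Flag a value whose z-score against the entity's own baseline exceeds the threshold."""
    if entity not in baseline:
        return True  # assumption: entities without a baseline are surfaced for review
    mean, std = baseline[entity]
    if std == 0:
        return value != mean  # perfectly constant history: any change is unusual
    return abs(value - mean) / std > threshold


# Hypothetical metric: megabytes each user downloads from a file server per day.
history = {"alice": [120, 135, 110, 128, 140, 125, 118]}
baseline = build_baseline(history)
print(is_anomalous(baseline, "alice", 130))   # False: within her normal range
print(is_anomalous(baseline, "alice", 900))   # True: far outside her baseline
print(is_anomalous(baseline, "mallory", 50))  # True: no baseline exists yet
```

Note that no malware signature is involved: a never-before-seen exfiltration tool would still stand out if it pushed a user's download volume far beyond that user's own normal range.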

Looking Ahead: Governance is the New Perimeter

The World Economic Forum's latest report flags the geopolitical fractures and supply chain complexity compounding the AI threat, but notes a positive trend: the share of organizations actively assessing the security of their AI tools has nearly doubled, signaling a move towards structured governance.

The key takeaway for every organization in 2026 is that Zero Trust must be extended to Non-Human Identities (NHIs). As AI agents gain more power, accountability is paramount. Security teams must ensure that every autonomous action is logged, explainable, and reviewable—creating a stringent *Agentic Audit Trail* to redefine accountability in the new era of automated decision-making.
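One way to make an *Agentic Audit Trail* tamper-evident is to hash-chain its records, so that altering any past entry breaks verification. The Python sketch below assumes a hypothetical record schema (agent identity, action, target, and a free-text rationale to support explainability). It illustrates the hash-chaining technique and is not a standard log format or any vendor's API.

```python
import hashlib
import json
from datetime import datetime, timezone


class AgentAuditTrail:
    """Append-only log in which each record commits to the previous one via SHA-256."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first record

    def __init__(self):
        self.records = []

    @staticmethod
    def _digest(body):
        # Canonical JSON (sorted keys, no spaces) so the same record always hashes the same.
        canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, agent_id, action, target, rationale):
        prev_hash = self.records[-1]["hash"] if self.records else self.GENESIS
        body = {
            "agent_id": agent_id,
            "action": action,
            "target": target,
            "rationale": rationale,  # why the agent acted: supports explainability and review
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        record = dict(body, hash=self._digest(body))
        self.records.append(record)
        return record

    def verify(self):
        """Recompute every hash and link; any edited or reordered record returns False."""
        prev_hash = self.GENESIS
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != record["hash"]:
                return False
            prev_hash = record["hash"]
        return True


trail = AgentAuditTrail()
trail.append("triage-agent", "isolate_host", "srv-web-01", "Beaconing to a known C2 domain")
trail.append("triage-agent", "disable_account", "svc-backup", "Logins from two countries within 10 minutes")
print(trail.verify())  # True
trail.records[0]["target"] = "srv-db-02"  # simulate someone rewriting history
print(trail.verify())  # False
```

A hash chain only detects edits: an attacker able to rewrite the entire log could recompute every hash. In practice the latest hash would also need to be anchored somewhere the agent itself cannot write, such as write-once storage.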

See you on the other side.

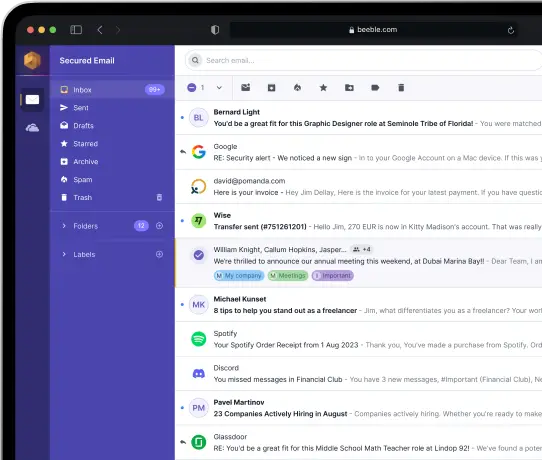

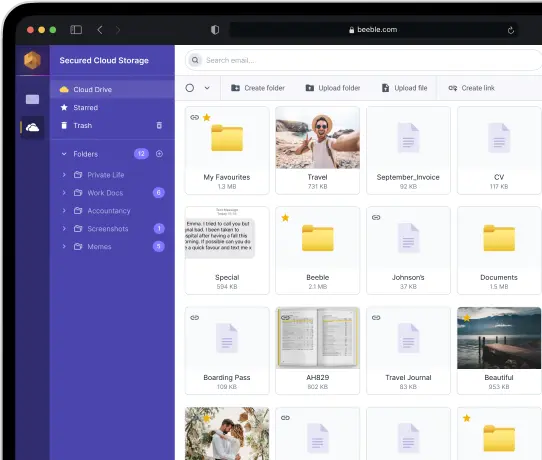
