Zuckerberg Takes the Stand: A Landmark Trial for Social Media Safety and Youth Mental Health

In a courtroom that has become the epicenter of a global debate on digital ethics, Meta CEO Mark Zuckerberg is set to testify this week. Unlike the televised congressional hearings of years past, this appearance is part of a high-stakes civil trial. At the heart of the case is a 20-year-old woman identified as KGM, who alleges that the algorithmic designs of Meta and Google platforms fostered a spiral of depressive and suicidal thoughts during her formative years.

This trial represents a pivotal moment for the tech industry. For the first time, the internal mechanics of social media engagement are being scrutinized not just by lawmakers, but by a jury tasked with determining if these platforms are inherently defective products. The outcome could redefine the legal responsibilities of tech giants toward their youngest users.

The Human Cost of Engagement

The plaintiff, KGM, began using social media platforms at the age of 11. Her legal team argues that the platforms' recommendation engines—designed to maximize time-on-site—exposed her to increasingly harmful content related to self-harm and disordered eating. The lawsuit contends that these algorithms functioned like a "digital dopamine loop," making it nearly impossible for a child to disengage even as their mental health deteriorated.

While Meta has long maintained that it provides tools for safety and parental supervision, the plaintiff's attorneys are focusing on the gap between the company's public statements and its internal research. They point to the "Facebook Files" and subsequent leaks suggesting that the company was aware of Instagram's negative impact on teenage girls' body image long before it made significant changes to the app's interface.

The Legal Battleground: Design vs. Content

For decades, tech companies have been shielded by Section 230 of the Communications Decency Act, which generally protects platforms from liability for content posted by third parties. The legal strategy in the KGM case, however, seeks to sidestep Section 230 by framing the claims as product liability.

The argument is straightforward: the harm came not only from the content itself but from the design of the platform. Features such as infinite scroll, ephemeral stories, and push notifications are being framed as addictive mechanisms that exploit a child's still-developing impulse control. By categorizing these features as design defects, the plaintiffs hope to hold Meta accountable for the way its software shapes user behavior.

Meta’s Defense and the Industry Response

Meta's legal team is expected to argue that responsibility for social media use lies primarily with parents and that the company has invested billions in safety personnel and technology. It will likely highlight the introduction of "Teen Accounts," which feature private-by-default settings and time limits, as evidence of its commitment to protecting young users.

Google, the other defendant, maintains that its platforms, such as YouTube, provide educational value and that it has robust age-gating mechanisms in place. The defense's narrative centers on the idea that social media reflects society rather than causing its ills, and that blaming algorithms oversimplifies a complex mental health crisis driven by many socioeconomic factors.

Why This Testimony Matters Now

Mark Zuckerberg’s testimony is significant because it forces a public accounting of corporate priorities. In previous hearings, Zuckerberg has often pivoted to the benefits of connectivity. In a trial setting, however, he must answer specific questions about the trade-offs made between user growth and user safety.

If the jury finds in favor of KGM, the verdict could strengthen the position of plaintiffs in thousands of similar lawsuits already pending, including those consolidated in multidistrict litigation. It could also force a fundamental redesign of how social media works for minors, moving away from engagement-based algorithms toward more transparent, chronological, or safety-first models.

Practical Steps for Digital Safety

While the legal system moves slowly, the risks to youth mental health remain immediate. For parents and educators navigating this landscape, several proactive steps can mitigate the risks associated with heavy social media use:

- Audit Notification Settings: Disable non-essential push notifications to break the cycle of constant checking and "FOMO" (fear of missing out).

- Utilize Native Parental Controls: iOS, Android, and many of the apps themselves now offer time-management tools. Setting hard stops for app use at night can help protect sleep quality and mental clarity.

- Encourage Algorithmic Awareness: Teach children that what they see is a curated feed designed to keep them watching, not necessarily a reflection of reality. Understanding the "why" behind a recommendation can help demystify the experience.

- Prioritize "Active" over "Passive" Use: Research suggests that using social media to create and communicate is less harmful than passive scrolling. Encourage kids to use these tools for genuine connection rather than mindless consumption.

Looking Ahead

As the trial progresses, the tech industry is watching closely. We are entering a period in which "moving fast and breaking things" is no longer an acceptable mantra when what is being broken is the mental health and well-being of a generation. Whether through court rulings or new legislation, the era of unregulated algorithmic experimentation on children appears to be reaching its end.

See you on the other side.

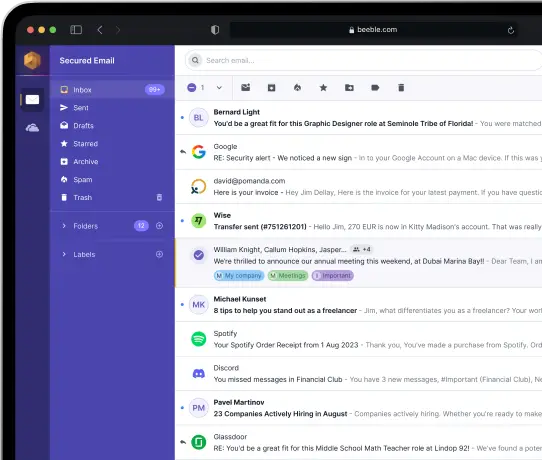

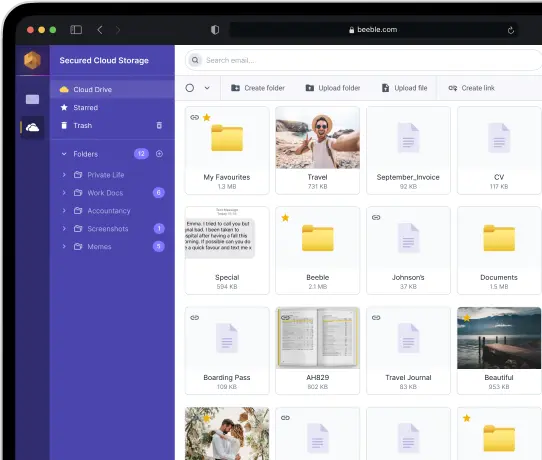
