Arista CEO's AMD Comments Trigger Stock Swings for Nvidia and AMD

The Market Reacts to Deployment Shifts

Friday's trading session delivered a stark reminder of how sensitive chip stocks remain to even subtle shifts in datacenter dynamics. Shares of Nvidia fell while Advanced Micro Devices climbed after Arista Networks CEO Jayshree Ullal revealed that her company is witnessing deployment shifts toward AMD processors. The comments, made during an investor call, sent ripples through the semiconductor sector and highlighted the intensifying competition in the AI accelerator market.

Arista Networks, a major provider of cloud networking solutions with deep ties to hyperscale datacenter operators, serves as a bellwether for broader industry trends. When its leadership discusses customer preferences, investors pay attention. Ullal's remarks suggested that at least some datacenter operators are diversifying their GPU procurement strategies, moving away from exclusive reliance on Nvidia's dominant platform.

Understanding Arista's Role in the Datacenter Ecosystem

Arista Networks occupies a unique position in the technology stack. The company provides high-performance switches and networking infrastructure that connect thousands of GPUs within modern AI training clusters. These networks must handle enormous bandwidth requirements as data flows between processors during model training and inference workloads.

Because Arista works closely with datacenter operators during deployment planning, the company gains early visibility into hardware purchasing decisions. When Ullal discusses shifts toward AMD, she's not speculating—she's reporting what her engineering teams are seeing on the ground as they design and install networking infrastructure around specific GPU choices.

This insider perspective makes Arista's observations particularly valuable for understanding competitive dynamics between Nvidia and AMD in the AI accelerator space. The company's customer base includes major cloud providers and enterprises building private AI infrastructure, precisely the segment where AMD has been trying to gain traction.

AMD's Challenge to Nvidia's Dominance

Nvidia has maintained an overwhelming lead in AI accelerators, with its H100 and newer H200 GPUs widely used for large language model training. The company's CUDA software ecosystem, built over nearly two decades, creates substantial switching costs for developers who have optimized their code for Nvidia's architecture.

AMD, however, has been investing heavily to close this gap. The company's MI300 series, particularly the MI300X accelerator, represents its most serious attempt yet to capture meaningful datacenter AI market share. AMD has emphasized several advantages: competitive performance on specific workloads, potentially better availability amid Nvidia's supply constraints, and pricing strategies that appeal to cost-conscious buyers.

The software challenge remains significant. AMD's ROCm platform continues maturing, but it lacks the ecosystem depth of CUDA. Still, major cloud providers have strong incentives to support multiple vendors. Avoiding single-supplier dependence provides negotiating leverage and reduces supply chain risk—factors that matter enormously when planning multi-billion-dollar infrastructure investments.

What the Deployment Shifts Actually Mean

It's crucial to maintain perspective on what Arista's CEO actually indicated. Ullal spoke about "some deployment shifting," not a wholesale migration away from Nvidia. The phrasing suggests incremental changes rather than a fundamental market realignment.

Datacenter operators typically take a portfolio approach to infrastructure. They might deploy Nvidia GPUs for workloads that absolutely require CUDA compatibility while experimenting with AMD alternatives for tasks where the software ecosystem is less critical. This diversification strategy allows them to test AMD's capabilities without abandoning the proven Nvidia platform.

The stock market reaction, however, amplified these incremental signals. Nvidia's shares have traded at elevated valuations reflecting expectations of continued dominance. Any hint of competitive pressure draws outsized attention from investors looking for inflection points. Conversely, AMD has struggled to demonstrate meaningful traction against Nvidia in AI, making any positive data point valuable for its narrative.

The Broader Competitive Landscape

The AI accelerator market is entering a more complex phase. Beyond Nvidia and AMD, several other players are positioning themselves:

Custom silicon from cloud providers themselves poses a long-term threat. Google's TPUs, Amazon's Trainium and Inferentia chips, and Microsoft's Maia accelerators all represent internal alternatives that reduce dependence on external GPU suppliers for certain workloads.

Emerging startups like Cerebras, Groq, and SambaNova are targeting specific niches with novel architectures, though their market share remains minimal compared to the established players.

Intel continues developing its datacenter AI accelerator strategy with the Gaudi line, which it gained through the Habana Labs acquisition, though its progress has been slower than initially anticipated.

This diversification benefits the industry by reducing concentration risk and spurring innovation. However, Nvidia's entrenched position means challengers face an uphill battle requiring not just competitive hardware but also substantial software investment.

Practical Implications for Tech Buyers and Investors

For enterprises planning AI infrastructure investments, Arista's comments reinforce several practical considerations:

Evaluate multi-vendor strategies. Don't assume Nvidia is the only viable option. Conduct proof-of-concept testing with AMD accelerators for your specific workloads to assess real-world performance and software compatibility.

Consider total cost of ownership. GPU acquisition costs represent only part of the equation. Factor in networking requirements, power consumption, cooling infrastructure, and software development resources needed to optimize for different platforms.

Plan for longer lead times. Supply constraints continue affecting both Nvidia and AMD, though availability varies by product line and customer relationship. Build procurement timelines that account for potential delays.

Monitor software ecosystem development. AMD's ROCm and other CUDA alternatives are improving rapidly. What's impossible today might be feasible in six months as frameworks add better support for alternative platforms.
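The total-cost-of-ownership point above can be made concrete with a rough calculation. The following is a minimal sketch, not a procurement model: the class, function, and field names are hypothetical, the cost categories are simply the ones named above (acquisition, networking, power, cooling, software effort), and cooling is approximated by multiplying accelerator power draw by a facility PUE factor, which is an assumption of this sketch. Every input must come from your own vendor quotes and measurements.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 24 * 365


@dataclass
class AcceleratorOption:
    """Hypothetical per-platform inputs; every value is supplied by the buyer."""
    name: str
    unit_price: float           # purchase price per accelerator (USD)
    networking_per_unit: float  # switches, optics, cabling apportioned per accelerator (USD)
    power_kw: float             # average power draw per accelerator (kW)
    software_porting: float     # one-time engineering cost to port and optimize (USD)


def total_cost_of_ownership(option, units, years, usd_per_kwh, pue):
    """Estimate multi-year cost for one platform.

    Assumption: cooling and facility overhead are folded into `pue`
    (power usage effectiveness), so energy = IT power * pue.
    """
    hardware = units * (option.unit_price + option.networking_per_unit)
    energy_kwh = units * option.power_kw * pue * HOURS_PER_YEAR * years
    return hardware + energy_kwh * usd_per_kwh + option.software_porting


def cheapest(options, **kwargs):
    """Return the option with the lowest estimated cost under the same assumptions."""
    return min(options, key=lambda o: total_cost_of_ownership(o, **kwargs))
```

Running the same function over each candidate platform with identical `units`, `years`, `usd_per_kwh`, and `pue` values keeps the comparison consistent. The software porting term is where the CUDA versus ROCm gap discussed earlier would show up, and it is usually the hardest input to estimate.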

For investors, the market reaction illustrates how sensitive semiconductor valuations remain to competitive dynamics. Nvidia's premium valuation depends on maintaining its leadership position. Evidence of meaningful share losses—even small ones—could trigger significant repricing. Conversely, AMD must demonstrate it can convert technical capabilities into actual revenue growth in the datacenter segment.

Looking Ahead: Competition Intensifies

The comments from Arista's CEO represent one data point in an evolving story. The AI accelerator market is maturing from a phase of explosive growth driven by a single dominant player toward a more competitive landscape with multiple viable options.

Nvidia still holds overwhelming advantages in software, ecosystem, and installed base. However, the economics of datacenter-scale AI deployment create strong incentives for diversification. As AMD's software stack matures and more developers optimize for multiple platforms, the barriers to adoption will continue falling.

The market's reaction to Ullal's remarks—however outsized—reflects a fundamental truth: the datacenter GPU market is consequential enough that even incremental shifts warrant close attention. Both Nvidia and AMD will report detailed financial results in their upcoming earnings calls, providing more concrete data about whether these deployment shifts are translating into measurable revenue changes.

For now, the takeaway is clear: competition in AI infrastructure is intensifying, and customers are increasingly willing to consider alternatives to the established leader. Whether this represents the beginning of a meaningful market share shift or merely normal diversification within a rapidly growing market remains to be seen.

See you on the other side.

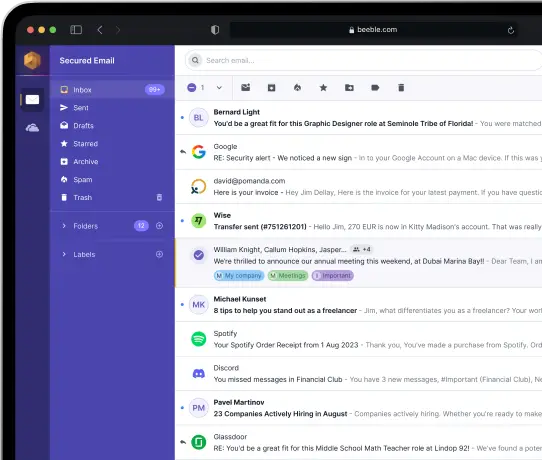

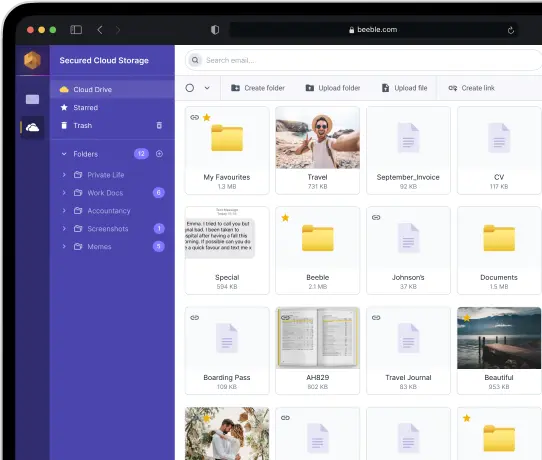

Our end-to-end encrypted email and cloud storage solution provides the most powerful means of secure data exchange, ensuring the safety and privacy of your data.

/ Create a free account